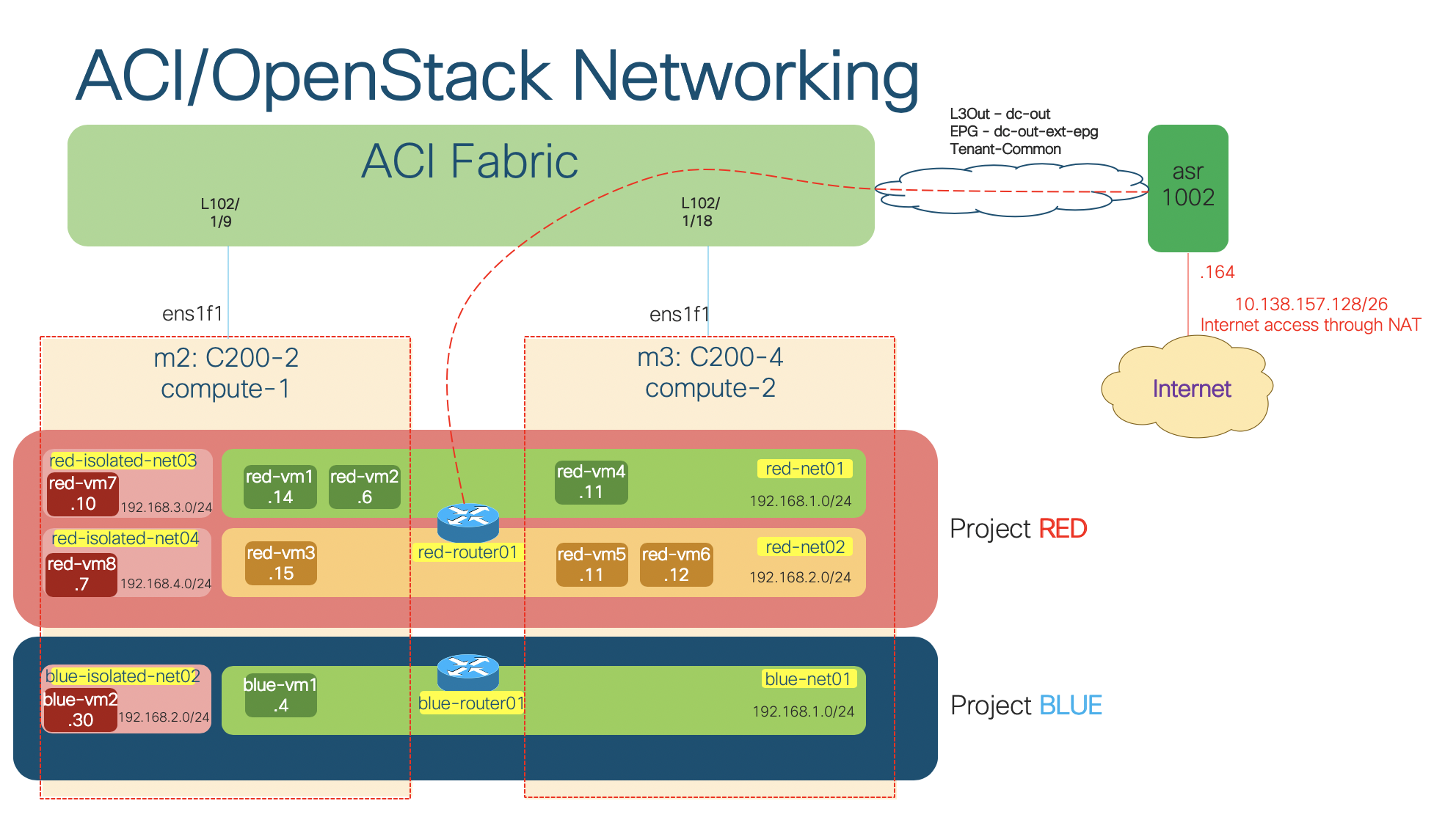

This is part 2 of the deep-dive series into OpenStack networking with Cisco ACI OpFlex integration. In the previous post, I described the different integration modes between ACI and OpenStack (ML2 vs. GBP, OpFlex vs. non-OpFlex). We also looked at the constructs (tenants/VRF/BD/EPG/contracts) that the plugin automatically creates on ACI when we create OpenStack objects, as well as the distributed routing feature of OpFlex OVS.

In this part, we will take a deep dive into the distributed DHCP and Neutron metadata optimizations of the OpFlex agent, i.e. how OpenStack instances get their IP addresses and metadata when the integration plugin is in use.

Neutron DHCP optimization

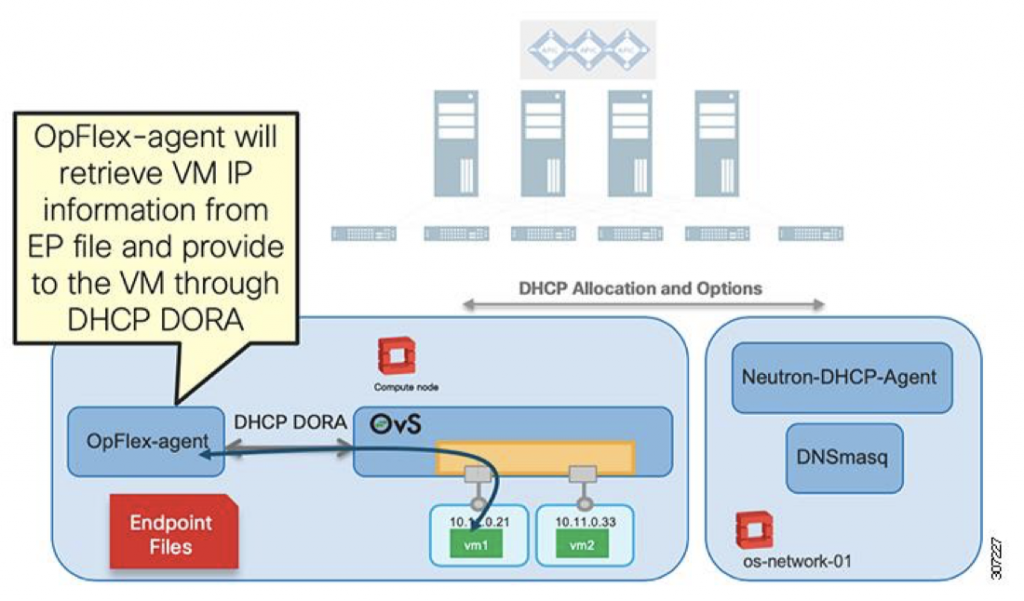

In OpenStack, the Neutron DHCP agent running on the controller/network nodes is responsible for assigning IP addresses to the instances. This approach has some limitations. The controller/network nodes must handle the DHCP Discover/Offer/Request/Acknowledge (DORA) exchange for every instance when it boots. In addition, midway through the lease period, each instance renews its lease by sending a new DHCP request to the server. As a result, the controller/network nodes can become a bottleneck in a large deployment.
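To put rough numbers on the renewal part of this load, the sketch below counts renewal messages per day. It assumes the RFC 2131 default renewal time (T1 = 50% of the lease), a 24-hour lease (86400 s, Neutron's default `dhcp_lease_duration`; your deployment may differ), two messages per renewal (REQUEST and ACK), and hypothetical instance counts. Boot-time DORA bursts are not included.

```python
def renewal_messages_per_day(instances: int, lease_seconds: int = 86400) -> float:
    """Estimate DHCP renewal messages per day handled by one DHCP server.

    Illustrative assumptions only:
    - each client renews at T1 = 50% of the lease (RFC 2131 default),
    - every renewal succeeds and costs 2 messages (REQUEST + ACK),
    - boot-time DORA exchanges are not counted.
    """
    renew_interval = lease_seconds / 2            # T1
    renewals_per_client = 86400 / renew_interval  # renewals in one day
    return instances * renewals_per_client * 2


# Hypothetical sizes: a centralized agent serving 10,000 instances
# versus one compute node answering only for its own 40 instances.
central = renewal_messages_per_day(10_000)
per_node = renewal_messages_per_day(40)
print(central, per_node)  # 40000.0 160.0
```

The absolute numbers are modest; the point is that the centralized figure grows with the whole cloud, while the distributed one grows only with the instances on a single node.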

With OpenStack running in OpFlex mode, the DHCP DORA exchange is handled by the OpFlex agent running on each compute node. The agent communicates with the Neutron nodes over the management network to obtain IP address allocations and DHCP options, and answers the DHCP DORA for the instances running on its own host. This architecture reduces the load on the Neutron nodes and keeps DHCP traffic local to the compute node.
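The essence of the distributed approach can be modelled in a few lines. This is a hypothetical sketch, not the OpFlex agent's actual implementation: it assumes the agent already knows the MAC-to-IP binding of each local instance (as the endpoint files examined below suggest), answers only for instances on its own host, and maps each client message type to its reply.

```python
from typing import Optional

# DHCP message types carried in option 53 (RFC 2132).
DISCOVER, OFFER, REQUEST, ACK, NAK = 1, 2, 3, 5, 6


def local_reply(msg_type: int, client_mac: str, local_endpoints: dict,
                requested_ip: Optional[str] = None) -> Optional[tuple]:
    """Decide how a per-host DHCP responder answers one client message.

    local_endpoints maps MAC -> IP for instances on *this* host only
    (hypothetically loaded from the agent's endpoint files).
    Returns (reply_type, ip), or None when the host should stay silent.
    """
    ip = local_endpoints.get(client_mac)
    if ip is None:
        return None                      # not a local instance: ignore
    if msg_type == DISCOVER:
        return (OFFER, ip)               # D -> O
    if msg_type == REQUEST:              # R -> A, also used for renewals
        if requested_ip is not None and requested_ip != ip:
            return (NAK, ip)             # client asked for the wrong address
        return (ACK, ip)
    return None                          # other message types not modelled


endpoints = {"fa:16:3e:3d:9d:9b": "192.168.1.14"}            # red-vm1
print(local_reply(DISCOVER, "fa:16:3e:3d:9d:9b", endpoints))  # (2, '192.168.1.14')
print(local_reply(REQUEST, "fa:16:3e:00:00:01", endpoints))   # None (unknown MAC)
```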

Now let’s see this in action in our lab by examining how an instance, red-vm1 (192.168.1.14) in network red-net01 of project Red, gets its IP address via DHCP.

On a compute node, an endpoint file is created in /var/lib/opflex-agent-ovs/endpoints/ for each instance running on the node. Let’s find the endpoint file for red-vm1 (192.168.1.14). First, we need the ID of the port associated with this instance:

administrator@ubuntu-lab:~/aci-openstack$ openstack port list | grep "\\.14"
| bb6aa59f-8f63-4157-ac2c-7e64607af2a8 | | fa:16:3e:3d:9d:9b | ip_address='192.168.1.14', subnet_id='5f990a09-e481-46ec-a053-1f26c20a9e1b' | ACTIVE|
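Both values needed to locate the endpoint file, the port ID and the MAC address, are in this row. A small hypothetical helper can extract them and build the path; it assumes the default `openstack port list` column order (ID, Name, MAC Address, Fixed IP Addresses, Status) and the `<port_id>_<mac_address>.ep` naming seen on the compute node.

```python
ENDPOINT_DIR = "/var/lib/opflex-agent-ovs/endpoints"


def endpoint_path(port_list_row: str) -> str:
    """Build the OpFlex endpoint file path from one `openstack port list` row.

    Assumes the default column order:
    | ID | Name | MAC Address | Fixed IP Addresses | Status |
    """
    cells = [c.strip() for c in port_list_row.strip().strip("|").split("|")]
    port_id, mac = cells[0], cells[2]
    # Naming convention observed on the compute node: <port_id>_<mac_address>.ep
    return f"{ENDPOINT_DIR}/{port_id}_{mac}.ep"


row = ("| bb6aa59f-8f63-4157-ac2c-7e64607af2a8 | | fa:16:3e:3d:9d:9b | "
       "ip_address='192.168.1.14', subnet_id='5f990a09-e481-46ec-a053-1f26c20a9e1b' | ACTIVE|")
print(endpoint_path(row))
# /var/lib/opflex-agent-ovs/endpoints/bb6aa59f-8f63-4157-ac2c-7e64607af2a8_fa:16:3e:3d:9d:9b.ep
```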

The endpoint file is named <port_id>_<mac_address>.ep. Next, let’s examine the contents of the endpoint file for red-vm1:

cat /var/lib/opflex-agent-ovs/endpoints/bb6aa59f-8f63-4157-ac2c-7e64607af2a8_fa:16:3e:3d:9d:9b.ep

{
    "neutron-metadata-optimization": true,
    "dhcp4": {
        "domain": "openstacklocal",
        "static-routes": [
            {
                "dest": "0.0.0.0",
                "next-hop": "192.168.1.1",
                "dest-prefix": 0
            },
            {
                "dest": "169.254.0.0",
                "next-hop": "192.168.1.2",
                "dest-prefix": 16
            }
        ],
        "ip": "192.168.1.14",
        "interface-mtu": 1500,
        "prefix-len": 24,
        "server-mac": "fa:16:3e:21:c5:70",
        "routers": [
            "192.168.1.1"
        ],
        "server-ip": "192.168.1.2",
        "dns-servers": [
            "192.168.1.2"
        ]
    },
    "access-interface": "tapbb6aa59f-8f",
    "ip": [
        "192.168.1.14"
    ],
    "promiscuous-mode": false,
    "anycast-return-ip": [
        "192.168.1.14"
    ],
    "mac": "fa:16:3e:3d:9d:9b",
    "domain-name": "DefaultVRF",
    "domain-policy-space": "prj_dcf5d773acaa4e2082519f7203fe620e",
    "ip-address-mapping": [
        {
            "mapped-ip": "192.168.1.14",
            "policy-space-name": "common",
            "floating-ip": "172.18.0.16",
            "endpoint-group-name": "juju2-ostack_OpenStack|EXT-dc-out",
            "uuid": "32af2d41-4775-4471-ae0b-5f5b9cf39123"
        }
    ],
    "endpoint-group-name": "OpenStack|net_82fe08fa-baeb-470d-9c7e-0283f66f7a7d",
    "access-uplink-interface": "qpfbb6aa59f-8f",
    "neutron-network": "82fe08fa-baeb-470d-9c7e-0283f66f7a7d",
    "interface-name": "qpibb6aa59f-8f",
    "security-group": [
        {
            "policy-space": "prj_dcf5d773acaa4e2082519f7203fe620e",
            "name": "877683c5-c9aa-4086-a630-7159d7837c4d"
        },
        {
            "policy-space": "prj_dcf5d773acaa4e2082519f7203fe620e",
            "name": "aead52d3-f40e-446f-b8f3-b21c818ae899"
        },
        {
            "policy-space": "common",
            "name": "juju2-ostack_DefaultSecurityGroup"
        }
    ],
    "uuid": "bb6aa59f-8f63-4157-ac2c-7e64607af2a8|fa-16-3e-3d-9d-9b",
    "policy-space-name": "prj_dcf5d773acaa4e2082519f7203fe620e",
    "attributes": {
        "vm-name": "d8665b31-bbbb-4a9b-b23b-3c349231c529"
    }
}

The integration plugin populates the endpoint files with the information the OpFlex agent uses to provide distributed services to the instances running on the compute node. The DHCP information for red-vm1 can be found in the following part of the dhcp4 section:

"server-mac": "fa:16:3e:21:c5:70",
"routers": [ "192.168.1.1" ],
"server-ip": "192.168.1.2",
"dns-servers": [ "192.168.1.2" ]

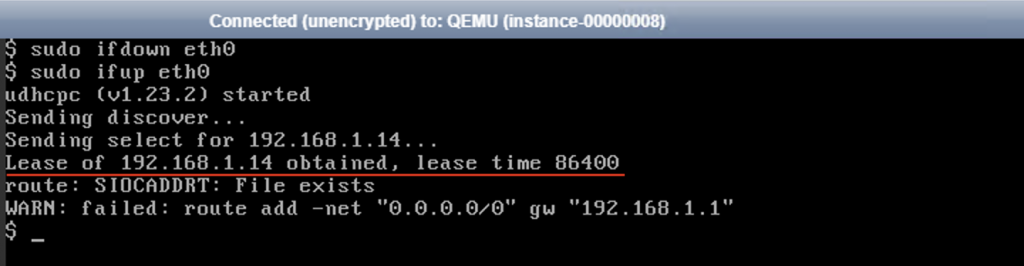
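To make the link between the endpoint file and the DHCP reply concrete, here is a hedged sketch of how two fields from the `dhcp4` section could be turned into DHCP option payloads: option 1 (subnet mask, RFC 2132) from `prefix-len`, and option 121 (classless static routes, RFC 3442) from `static-routes`. How the OpFlex agent actually builds its replies is not shown in this post, so treat this as an illustration of the encoding only.

```python
import ipaddress


def subnet_mask(prefix_len: int) -> str:
    """DHCP option 1 (subnet mask) from the endpoint file's prefix-len."""
    return str(ipaddress.IPv4Network(f"0.0.0.0/{prefix_len}").netmask)


def classless_static_routes(static_routes: list) -> bytes:
    """DHCP option 121 payload (RFC 3442) from the endpoint file's static-routes.

    Each route is encoded as: prefix length, the significant octets of the
    destination, then the 4-byte next hop.
    """
    out = bytearray()
    for route in static_routes:
        width = route["dest-prefix"]
        dest = ipaddress.IPv4Address(route["dest"]).packed
        out.append(width)
        out += dest[: (width + 7) // 8]          # only the significant octets
        out += ipaddress.IPv4Address(route["next-hop"]).packed
    return bytes(out)


# Values copied from red-vm1's dhcp4 section.
dhcp4 = {
    "prefix-len": 24,
    "static-routes": [
        {"dest": "0.0.0.0", "next-hop": "192.168.1.1", "dest-prefix": 0},
        {"dest": "169.254.0.0", "next-hop": "192.168.1.2", "dest-prefix": 16},
    ],
}
print(subnet_mask(dhcp4["prefix-len"]))                      # 255.255.255.0
print(classless_static_routes(dhcp4["static-routes"]).hex())  # 00c0a8010110a9fec0a80102
```

RFC 3442 states that a client receiving option 121 must ignore the Router option (3), which is presumably why the default route via 192.168.1.1 also appears in `static-routes`.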

Now, on the console of red-vm1, release the DHCP lease and request a new one. The instance should get its IP address assigned again.

Let’s walk through the packet path from the instance. Using ovs-ofctl, we can examine the flow rules that match DHCP traffic (UDP ports 67 and 68) from the instances.

On br-int:

ubuntu@os-compute-01:~$ sudo ovs-ofctl dump-flows br-int | grep -E '=67|=68'
cookie=0x0, duration=3707486.257s, table=0, n_packets=87, n_bytes=29756, idle_age=35483, hard_age=65534, priority=200,udp,in_port=1,tp_src=68,tp_dst=67 actions=load:0x2->NXM_N)
cookie=0x0, duration=3462205.988s, table=0, n_packets=164, n_bytes=54858, idle_age=850, hard_age=65534, priority=200,udp,in_port=5,tp_src=68,tp_dst=67 actions=load:0x6->NXM_NX)
cookie=0x0, duration=2760388.805s, table=0, n_packets=67, n_bytes=22930, idle_age=3191, hard_age=65534, priority=200,udp,in_port=7,tp_src=68,tp_dst=67 actions=load:0x8->NXM_NX)
cookie=0x0, duration=2760386.336s, table=0, n_packets=67, n_bytes=22941, idle_age=2318, hard_age=65534, priority=200,udp,in_port=9,tp_src=68,tp_dst=67 actions=load:0xa->NXM_NX)
cookie=0x0, duration=2760382.385s, table=0, n_packets=65, n_bytes=22232, idle_age=38763, hard_age=65534, priority=200,udp,in_port=11,tp_src=68,tp_dst=67 actions=load:0xc->NXM_)
cookie=0x0, duration=2760352.341s, table=0, n_packets=65, n_bytes=22232, idle_age=38742, hard_age=65534, priority=200,udp,in_port=13,tp_src=68,tp_dst=67 actions=load:0xe->NXM_)
cookie=0x0, duration=2760333.855s, table=0, n_packets=65, n_bytes=22232, idle_age=38721, hard_age=65534, priority=200,udp,in_port=15,tp_src=68,tp_dst=67 actions=load:0x10->NXM)
cookie=0x0, duration=2760303.486s, table=0, n_packets=65, n_bytes=22232, idle_age=38698, hard_age=65534, priority=200,udp,in_port=17,tp_src=68,tp_dst=67 actions=load:0x12->NXM)
cookie=0x0, duration=2760289.477s, table=0, n_packets=65, n_bytes=22232, idle_age=38682, hard_age=65534, priority=200,udp,in_port=19,tp_src=68,tp_dst=67 actions=load:0x14->NXM)
cookie=0x1, duration=3707485.628s, table=1, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=8192,udp,reg0=0x2,tp_dst=68 actions=resubmit(,3)
cookie=0x1, duration=2760388.804s, table=1, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=8192,udp,reg0=0x3,tp_dst=68 actions=resubmit(,3)
cookie=0x1, duration=2760303.486s, table=1, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=8192,udp,reg0=0x4,tp_dst=68 actions=resubmit(,3)
cookie=0x1, duration=2570683.598s, table=1, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=8192,udp,reg0=0x5,tp_dst=68 actions=resubmit(,3)
<omitted>
cookie=0x3, duration=3707485.525s, table=2, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=8064,udp,reg0=0x2,tp_dst=67 actions=resubmit(,3)
cookie=0x3, duration=2760388.805s, table=2, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=8064,udp,reg0=0x3,tp_dst=67 actions=resubmit(,3)
cookie=0x3, duration=2760303.486s, table=2, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=8064,udp,reg0=0x4,tp_dst=67 actions=resubmit(,3)
cookie=0x3, duration=2570683.597s, table=2, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=8064,udp,reg0=0x5,tp_dst=67 actions=resubmit(,3)
<omitted>
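Reading long flow dumps by eye is error-prone. The helper below is a minimal, hypothetical parser for the text format printed above (stats as `key=value, ` pairs, then the match, then `actions=`). It is not part of OVS and may not cover every field OVS can print, but it is enough to filter the client-to-server DHCP flows.

```python
def parse_flow(line: str) -> dict:
    """Split one `ovs-ofctl dump-flows` line into a flat dict.

    Stats ("cookie=..., table=..., n_packets=...") and match fields
    ("priority=200,udp,in_port=1,...") become keys; bare protocol
    keywords such as "udp" go into "flags"; the action list is kept as text.
    """
    body, _, actions = line.strip().partition(" actions=")
    stats_part, _, match_part = body.rpartition(" ")
    flow = dict(kv.split("=", 1) for kv in stats_part.rstrip(",").split(", "))
    flow["flags"] = []
    for field in match_part.split(","):
        if "=" in field:
            key, value = field.split("=", 1)
            flow[key] = value
        else:
            flow["flags"].append(field)
    flow["actions"] = actions
    return flow


def dhcp_client_flows(lines):
    """Keep flows that match client-to-server DHCP (UDP destination port 67)."""
    flows = [parse_flow(line) for line in lines if " actions=" in line]
    return [f for f in flows if "udp" in f["flags"] and f.get("tp_dst") == "67"]


sample = ("cookie=0x0, duration=3707486.257s, table=0, n_packets=87, "
          "n_bytes=29756, idle_age=35483, hard_age=65534, "
          "priority=200,udp,in_port=1,tp_src=68,tp_dst=67 actions=load:0x2->NXM_N)")
flow = parse_flow(sample)
print(flow["table"], flow["in_port"], flow["n_packets"])  # 0 1 87
```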

On br-fabric, these DHCP packets are handed to the local OpenFlow controller. We can see the OpenFlow rules programmed on the bridge:

ubuntu@os-compute-01:~$ sudo ovs-ofctl dump-flows br-fabric -O OpenFlow13 | grep 'udp'
cookie=0x8000000000000004, duration=3708419.225s, table=0, n_packets=87, n_bytes=29756, priority=35,udp,in_port=5,dl_src=fa:16:3e:42:52:64,tp_src=68,tp_dst=67 actions=CONTROLLER:65535
cookie=0x8000000000000004, duration=3463138.884s, table=0, n_packets=164, n_bytes=54858, priority=35,udp,in_port=7,dl_src=fa:16:3e:df:00:9e,tp_src=68,tp_dst=67 actions=CONTROLLER:65535
cookie=0x8000000000000004, duration=2761321.697s, table=0, n_packets=67, n_bytes=22930, priority=35,udp,in_port=8,dl_src=fa:16:3e:3d:9d:9b,tp_src=68,tp_dst=67 actions=CONTROLLER:65535
cookie=0x8000000000000004, duration=2761319.230s, table=0, n_packets=67, n_bytes=22941, priority=35,udp,in_port=9,dl_src=fa:16:3e:a1:b1:2e,tp_src=68,tp_dst=67 actions=CONTROLLER:65535
cookie=0x8000000000000004, duration=2761315.277s, table=0, n_packets=65, n_bytes=22232, priority=35,udp,in_port=10,dl_src=fa:16:3e:3d:4d:be,tp_src=68,tp_dst=67 actions=CONTROLLER:65535
cookie=0x8000000000000004, duration=2761285.235s, table=0, n_packets=65, n_bytes=22232, priority=35,udp,in_port=11,dl_src=fa:16:3e:33:3b:19,tp_src=68,tp_dst=67 actions=CONTROLLER:65535
cookie=0x8000000000000004, duration=2761266.749s, table=0, n_packets=65, n_bytes=22232, priority=35,udp,in_port=12,dl_src=fa:16:3e:d9:19:ae,tp_src=68,tp_dst=67 actions=CONTROLLER:65535
cookie=0x8000000000000004, duration=2761236.381s, table=0, n_packets=65, n_bytes=22232, priority=35,udp,in_port=13,dl_src=fa:16:3e:20:a1:8f,tp_src=68,tp_dst=67 actions=CONTROLLER:65535
cookie=0x8000000000000004, duration=2761222.373s, table=0, n_packets=65, n_bytes=22232, priority=35,udp,in_port=14,dl_src=fa:16:3e:e4:da:de,tp_src=68,tp_dst=67 actions=CONTROLLER:65535

The DHCP traffic from the instances is handed to the OpenFlow controller running locally on the node (actions=CONTROLLER:65535), where a DHCP app answers the DHCP DORA without the requests ever leaving the compute node.
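As a cross-check, red-vm1's MAC address (fa:16:3e:3d:9d:9b, from its endpoint file) appears in one of the br-fabric entries. The hypothetical helper below pulls that entry out of the dump and reports its ingress port and packet counter; it assumes the one-flow-per-line output shown above.

```python
from typing import Optional


def flow_for_mac(lines, mac: str) -> Optional[tuple]:
    """Return (in_port, n_packets) of the DHCP punt flow whose dl_src == mac."""
    for line in lines:
        if f"dl_src={mac}," not in line or "actions=CONTROLLER" not in line:
            continue
        fields = {}
        # Normalise separators so stats, match and actions split uniformly.
        for token in line.replace(", ", ",").replace(" ", ",").split(","):
            if "=" in token:
                key, value = token.split("=", 1)
                fields[key] = value
        return int(fields["in_port"]), int(fields["n_packets"])
    return None


dump = [
    "cookie=0x8000000000000004, duration=2761321.697s, table=0, n_packets=67, "
    "n_bytes=22930, priority=35,udp,in_port=8,dl_src=fa:16:3e:3d:9d:9b,"
    "tp_src=68,tp_dst=67 actions=CONTROLLER:65535",
]
print(flow_for_mac(dump, "fa:16:3e:3d:9d:9b"))  # (8, 67)
```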

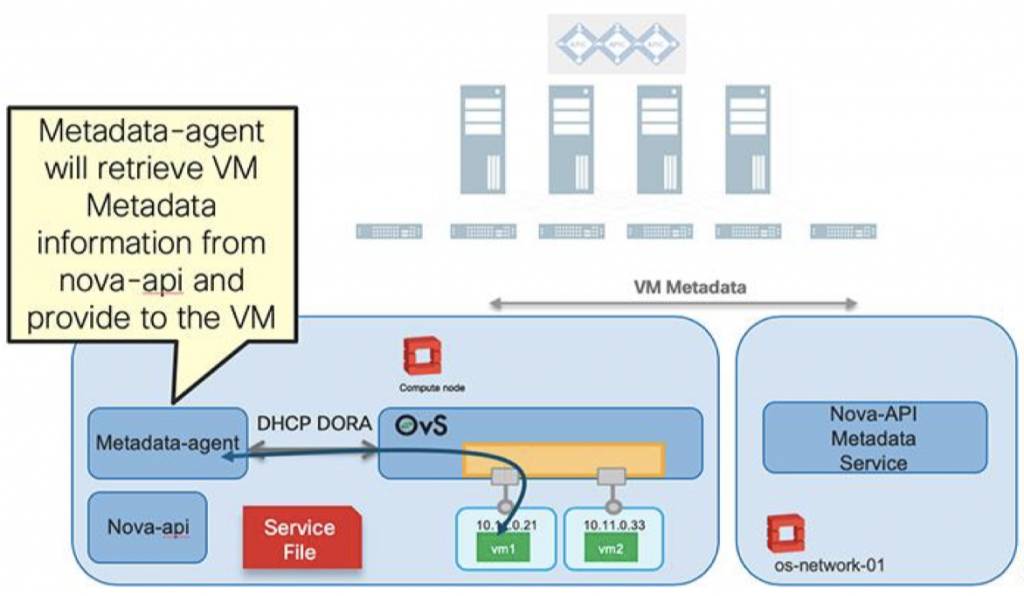

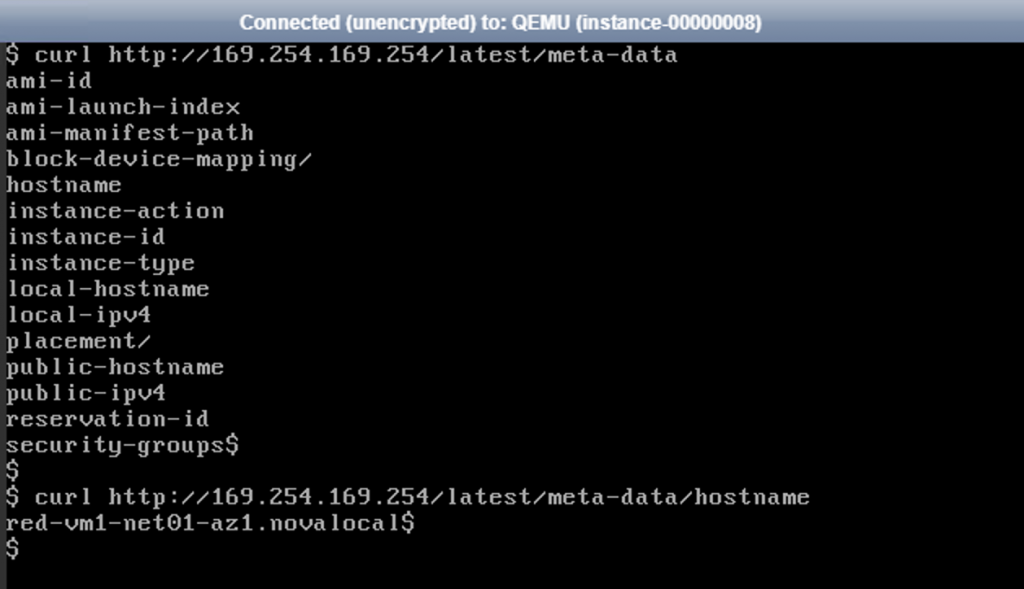

Neutron Metadata optimization

The metadata service provides a way for instances to retrieve instance-specific data through a REST API from within their tenant networks. Instances access the metadata service at http://169.254.169.254. In standard Neutron, the service can be provided from router namespaces (when a Neutron router is attached to the tenant network) or from DHCP namespaces (for isolated networks without a Neutron router), both running on the Neutron nodes.
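From the instance's point of view, the mechanism is transparent: it simply sends an HTTP GET to 169.254.169.254. A minimal client sketch follows, using only the standard library. `openstack/latest/meta_data.json` is the OpenStack-format metadata document; the fetch only works from inside an instance whose metadata path is reachable.

```python
import json
import urllib.request

METADATA_BASE = "http://169.254.169.254"


def metadata_url(path: str = "openstack/latest/meta_data.json") -> str:
    """Build a metadata URL; the default path is the OpenStack-format document."""
    return f"{METADATA_BASE}/{path.lstrip('/')}"


def fetch_metadata(path: str = "openstack/latest/meta_data.json",
                   timeout: float = 5.0) -> dict:
    """Fetch and decode a JSON metadata document (run inside an instance)."""
    with urllib.request.urlopen(metadata_url(path), timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    print(metadata_url())
    # Only succeeds from inside an instance with a working metadata path:
    # print(fetch_metadata()["uuid"])
```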

With OpenStack running in OpFlex mode, metadata services are provided by distributed metadata proxy instances running on compute nodes. The metadata proxy accesses Nova-API and the Nova metadata service running on the OpenStack controller nodes over the management network to deliver VM-specific metadata to each VM instance.

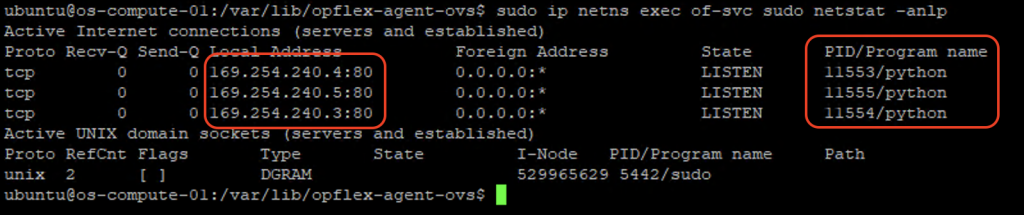
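One detail in red-vm1's endpoint file ties DHCP and metadata together: the DHCP static routes send 169.254.0.0/16 to 192.168.1.2 rather than to the default gateway 192.168.1.1. Assuming the guest installs these routes, a longest-prefix-match lookup shows where a metadata request is first sent; what happens to it after that is up to the OpenFlow rules on the node. This is a sketch of the guest-side routing decision only.

```python
import ipaddress

# static-routes copied from red-vm1's endpoint file (dhcp4 section)
ROUTES = [
    {"dest": "0.0.0.0", "dest-prefix": 0, "next-hop": "192.168.1.1"},
    {"dest": "169.254.0.0", "dest-prefix": 16, "next-hop": "192.168.1.2"},
]


def next_hop(dst: str, routes=ROUTES) -> str:
    """Pick the next hop for dst by longest-prefix match."""
    addr = ipaddress.IPv4Address(dst)
    best = None
    for r in routes:
        net = ipaddress.IPv4Network(f"{r['dest']}/{r['dest-prefix']}")
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, r["next-hop"])
    if best is None:
        raise LookupError(f"no route to {dst}")
    return best[1]


print(next_hop("169.254.169.254"))  # 192.168.1.2
print(next_hop("8.8.8.8"))          # 192.168.1.1
```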

Let’s see this function in action in our lab. On compute nodes that run the OpFlex agent, there is a separate namespace, of-svc (OpFlex service), in which the agent listens for metadata requests on port 80.

sudo ip netns exec of-svc netstat -anlp

The processes are listening on IP addresses in the 169.254.240.x range. Now that we have the PIDs of the processes, let’s look up their names:

sudo ps aux | grep -E '11553|11554|11555'

Nice! Those are the opflex-ns-proxy processes that handle metadata requests. But why are there three of them in our scenario? Let’s find out.

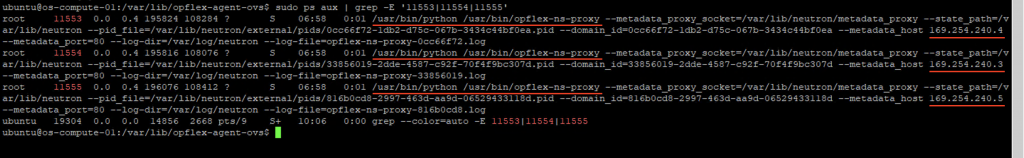

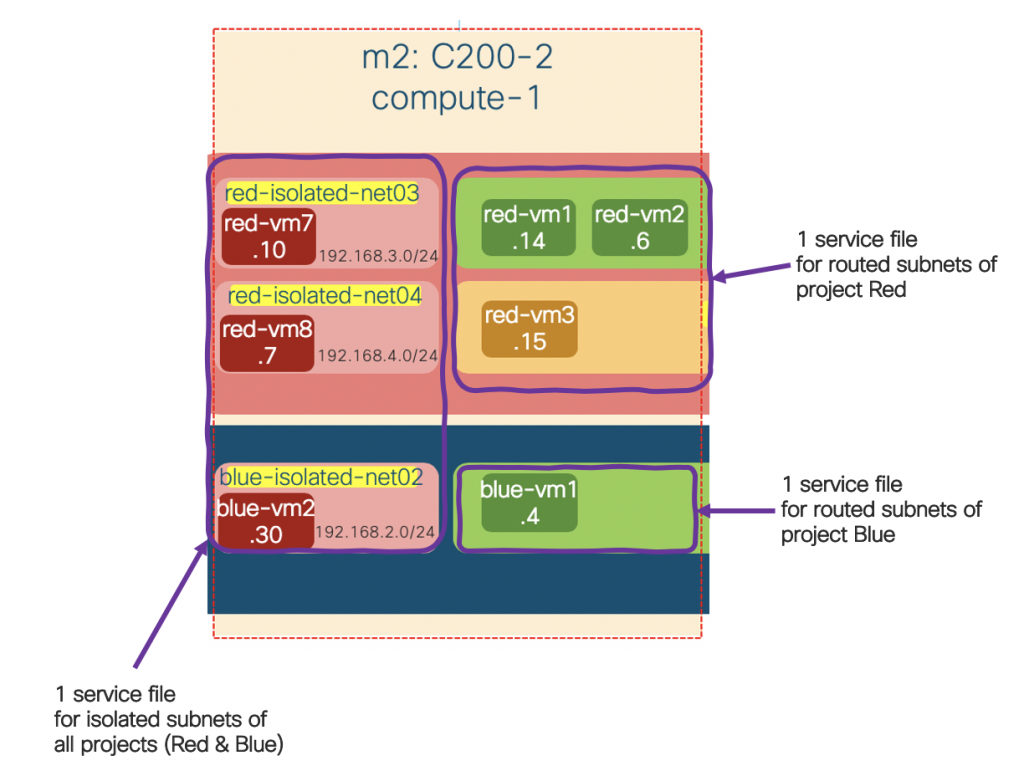

ls /var/lib/opflex-agent-ovs/services/
0cc66f72-1db2-d75c-067b-3434c44bf0ea.as
33856019-2dde-4587-c92f-70f4f9bc307d.as
816b0cd8-2997-463d-aa9d-06529433118d.as

The /var/lib/opflex-agent-ovs/services/ directory contains files named <domain_id>.as. Let’s see what’s inside:

cat /var/lib/opflex-agent-ovs/services/0cc66f72-1db2-d75c-067b-3434c44bf0ea.as | python -m json.tool
cat /var/lib/opflex-agent-ovs/services/33856019-2dde-4587-c92f-70f4f9bc307d.as | python -m json.tool
cat /var/lib/opflex-agent-ovs/services/816b0cd8-2997-463d-aa9d-06529433118d.as | python -m json.tool

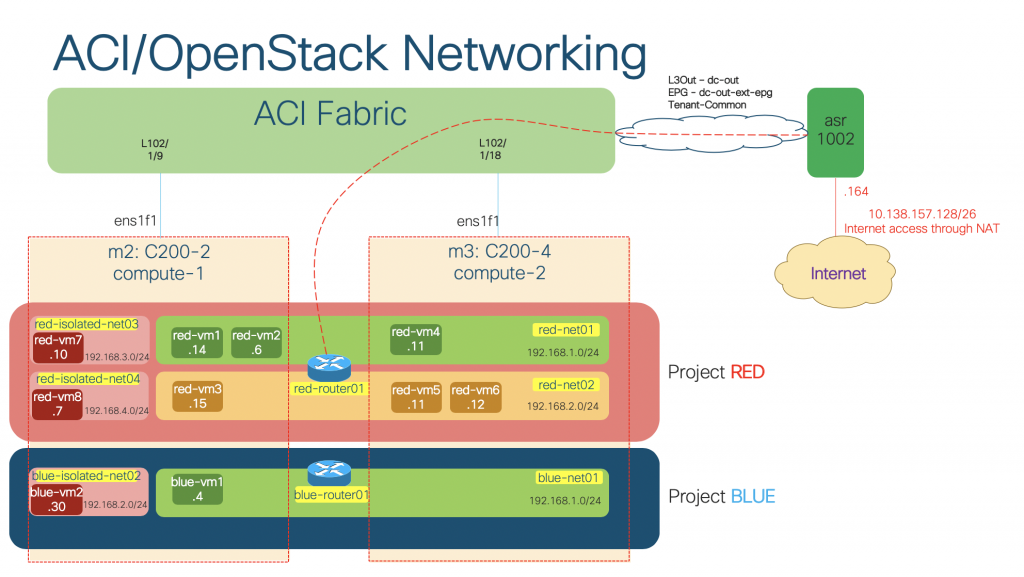

From the output, it turns out that the plugin creates:

- One service file per project, covering all of that project’s routed subnets. On ACI, these subnets are placed in a VRF named DefaultVRF in each user tenant.

- One service file for all unrouted (isolated) subnets across ALL projects. On ACI, all of these unrouted subnets are placed in a single VRF named <openstack_instance_id>_UnroutedVRF in tenant common.

Since we have both routed and isolated networks in two projects (Red and Blue), there are three service files on the compute-1 node: one for each project’s routed subnets, plus one shared by all unrouted subnets, as illustrated below:

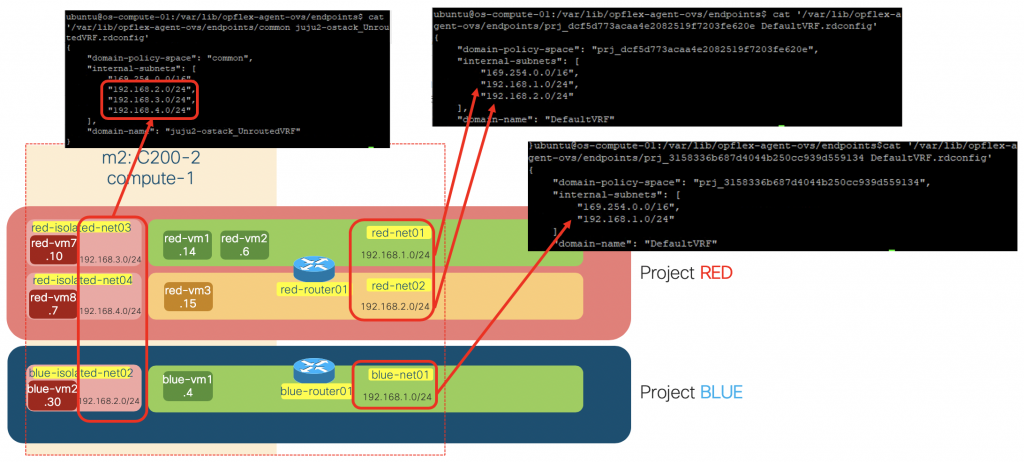
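The counting rule can be written down as a small function. The data model (subnet counts per project) is hypothetical, and the lab's exact subnet counts are not given in the post; only whether a project has any routed or unrouted subnet matters for the result.

```python
def expected_service_files(projects: dict) -> int:
    """Count metadata service files on a node under the two rules listed above.

    projects maps a project name to {"routed": n, "unrouted": m} subnet
    counts (a hypothetical data model for illustration).
    """
    # One file per project that has at least one routed subnet (its DefaultVRF).
    per_project = sum(1 for p in projects.values() if p["routed"] > 0)
    # One shared file if any project has an unrouted subnet (common UnroutedVRF).
    shared_unrouted = 1 if any(p["unrouted"] > 0 for p in projects.values()) else 0
    return per_project + shared_unrouted


lab = {"Red": {"routed": 1, "unrouted": 1}, "Blue": {"routed": 1, "unrouted": 1}}
print(expected_service_files(lab))  # 3
```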

We can also see the subnets served by these proxy services by checking the *.rdconfig files in the /var/lib/opflex-agent-ovs/endpoints/ directory:

ls /var/lib/opflex-agent-ovs/endpoints/*.rdconfig
'/var/lib/opflex-agent-ovs/endpoints/common juju2-ostack_UnroutedVRF.rdconfig'
'/var/lib/opflex-agent-ovs/endpoints/prj_3158336b687d4044b250cc939d559134 DefaultVRF.rdconfig'
'/var/lib/opflex-agent-ovs/endpoints/prj_dcf5d773acaa4e2082519f7203fe620e DefaultVRF.rdconfig'

cat '/var/lib/opflex-agent-ovs/endpoints/common juju2-ostack_UnroutedVRF.rdconfig'
cat '/var/lib/opflex-agent-ovs/endpoints/prj_3158336b687d4044b250cc939d559134 DefaultVRF.rdconfig'
cat '/var/lib/opflex-agent-ovs/endpoints/prj_dcf5d773acaa4e2082519f7203fe620e DefaultVRF.rdconfig'

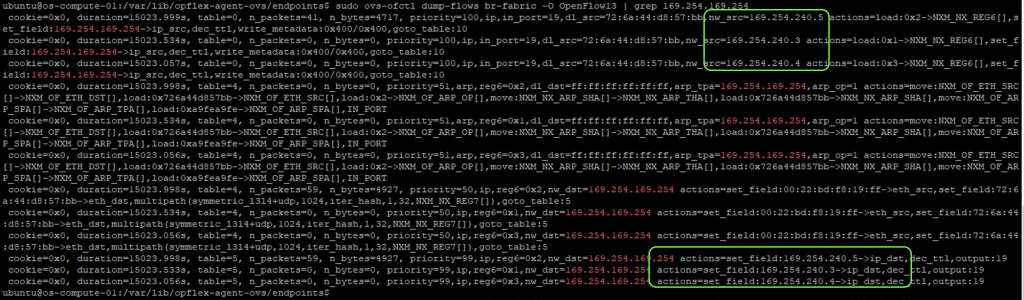
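Rather than running `cat` and `python -m json.tool` file by file, both directories can be walked in one go. This is a convenience sketch: the `.as` files are JSON (as the `json.tool` pipe shows), while the format of the `.rdconfig` files is not shown here, so the helper falls back to the raw text for anything that does not parse. File names containing spaces, such as the `.rdconfig` files above, are handled by `pathlib`.

```python
import json
from pathlib import Path


def dump_json_files(directory: str, pattern: str) -> dict:
    """Load every file matching pattern; keep the raw text if it is not JSON."""
    result = {}
    for path in sorted(Path(directory).glob(pattern)):
        text = path.read_text()
        try:
            result[path.name] = json.loads(text)
        except json.JSONDecodeError:
            result[path.name] = text      # format not confirmed as JSON
    return result


if __name__ == "__main__":
    for directory, pattern in [("/var/lib/opflex-agent-ovs/services", "*.as"),
                               ("/var/lib/opflex-agent-ovs/endpoints", "*.rdconfig")]:
        for name, content in dump_json_files(directory, pattern).items():
            print(f"== {name}")
            if isinstance(content, str):
                print(content)
            else:
                print(json.dumps(content, indent=4))
```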

When an instance queries its metadata at 169.254.169.254, OpenFlow rules redirect the request to the metadata proxy serving that instance’s subnet by setting the next hop. We can view these entries with ovs-ofctl:

sudo ovs-ofctl dump-flows br-fabric | grep 169.254.169.254
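Conceptually, each redirected request needs one decision: which proxy serves this source address? The sketch below models that decision with a hypothetical lookup table. The VRF labels, subnets and 169.254.240.x proxy addresses are illustrative, not read from the lab, and the real mechanism is the OpenFlow rules on br-fabric, not Python.

```python
import ipaddress

# Hypothetical table: (VRF, subnet) -> metadata proxy address in of-svc.
# All values below are illustrative placeholders.
PROXIES = {
    ("Red/DefaultVRF", "192.168.1.0/24"): "169.254.240.1",
    ("Blue/DefaultVRF", "192.168.1.0/24"): "169.254.240.2",   # overlaps Red
    ("common/UnroutedVRF", "10.0.0.0/24"): "169.254.240.3",
}


def proxy_for(vrf: str, src_ip: str) -> str:
    """Choose the metadata proxy for a request from src_ip in vrf."""
    addr = ipaddress.ip_address(src_ip)
    for (proxy_vrf, subnet), proxy in PROXIES.items():
        if proxy_vrf == vrf and addr in ipaddress.ip_network(subnet):
            return proxy
    raise LookupError(f"no metadata proxy for {src_ip} in {vrf}")


print(proxy_for("Red/DefaultVRF", "192.168.1.14"))   # 169.254.240.1
print(proxy_for("Blue/DefaultVRF", "192.168.1.14"))  # 169.254.240.2
```

The VRF is part of the key because routed subnets in different projects may overlap; that is presumably one reason the agent keeps a separate service per VRF.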

We now understand how an instance gets its metadata behind the scenes.

In the next post of the series, we will look at how ACI with the OpenStack plugin handles the routing from OpenStack to the external world in different scenarios.
