Many people have asked me how HyperFlex and ACI can actually be integrated, as they have not found a guide for it. So in this blog post, I’ll demonstrate the integration with step-by-step instructions using my local lab kit.

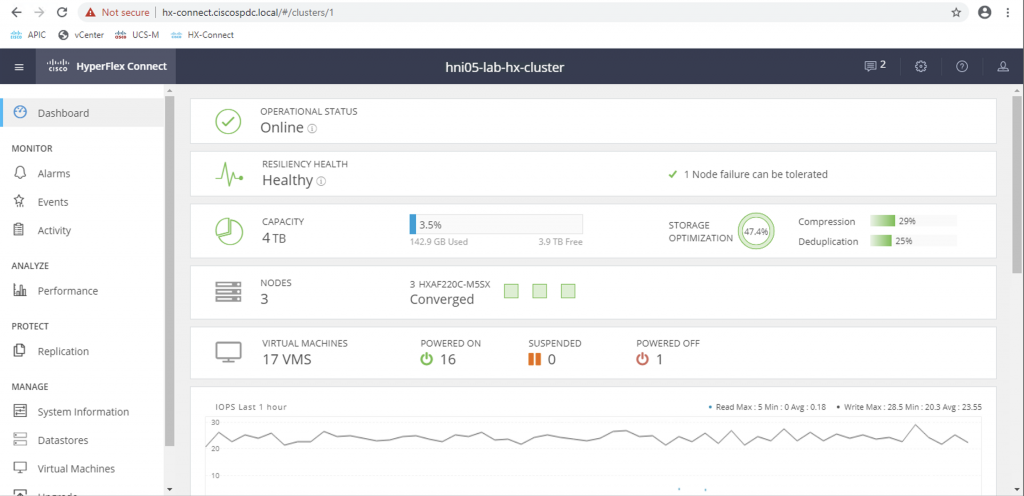

My team has had a 3-node HyperFlex All-Flash M5 cluster running for quite some time now. Here’s a quick snap of our beautiful gear:

In this post, I will not go over the HyperFlex installation. I assume that you will install your cluster by following the Cisco installation guide. I’ll touch briefly on how to prepare ACI to act as the upstream switching network for the HX cluster, and then focus on integrating the HyperFlex VMware cluster with ACI after the cluster has been installed.

The end result is that ACI will provide:

- upstream network connectivity for the Fabric Interconnects (FIs)

- integration with the FIs (UCS Manager integration) to automate the provisioning of the necessary VLANs on the appropriate uplinks

- integration with the HX ESXi nodes (VMM domain integration) to automate the creation of DVS port-groups corresponding to the EPGs.

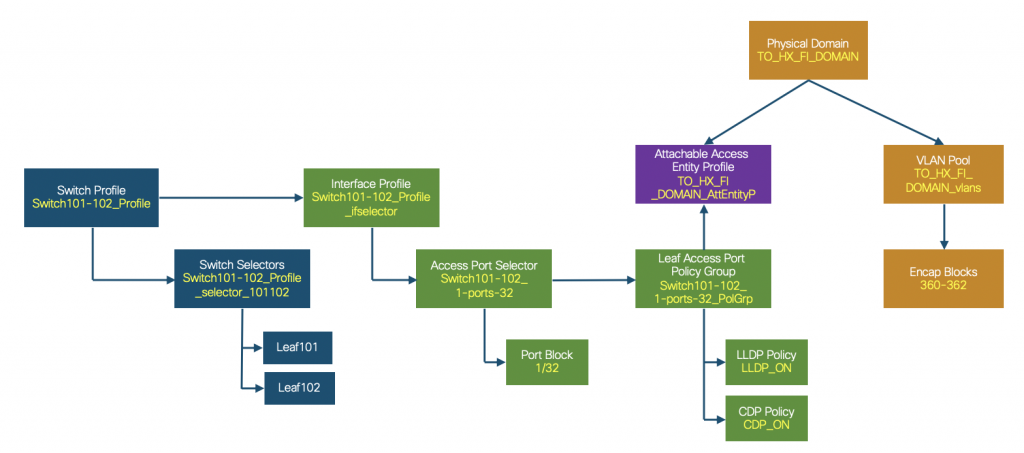

ACI fabric access policies for HX upstream networking

In order to meet HyperFlex networking requirements, we just need to make sure that ACI provides a Layer-2 switching domain for all the necessary VLANs among the HX nodes. You might want to refer to the Cisco Technotes for further details on these requirements.

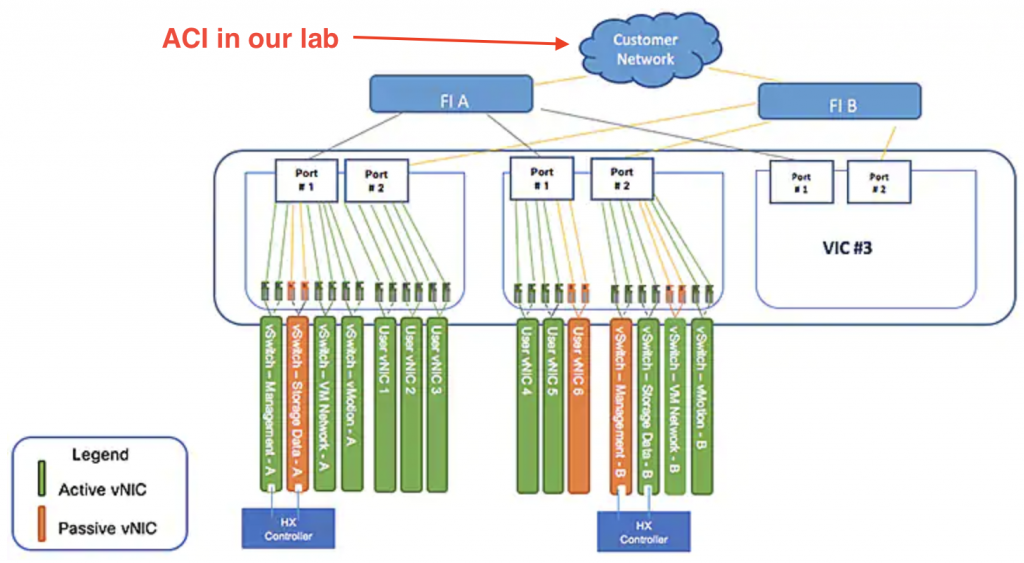

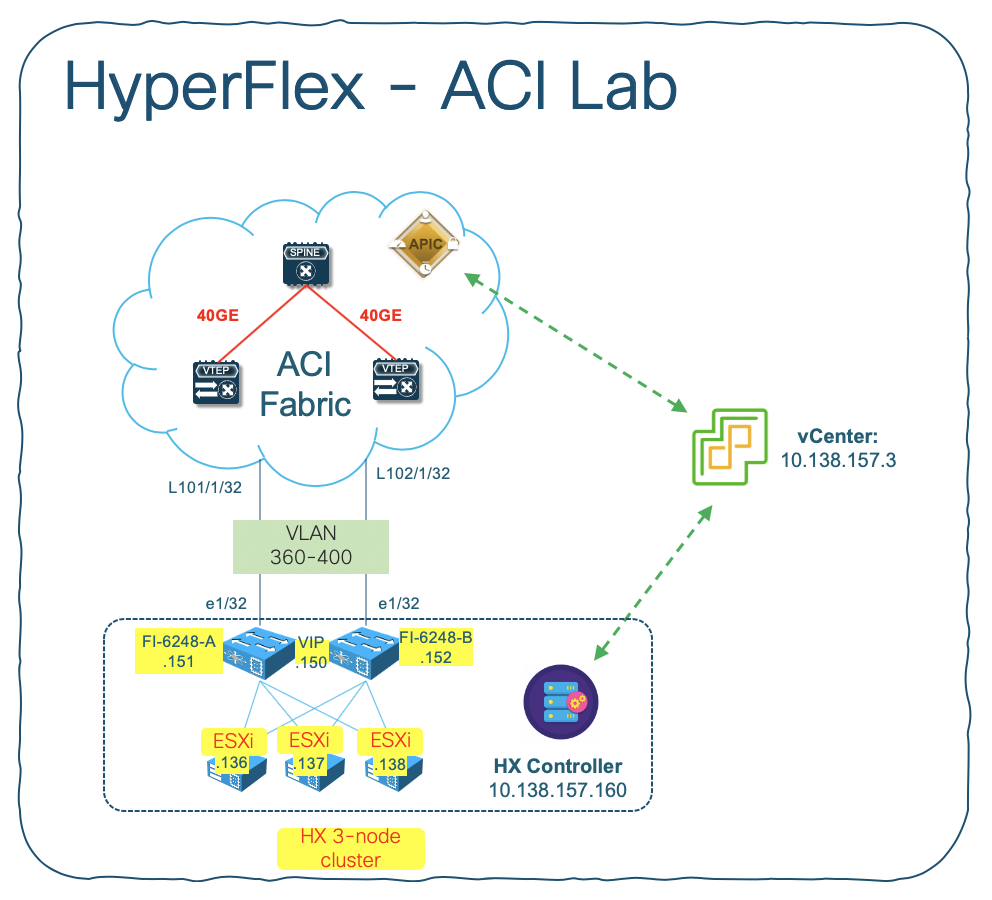

Specifically, the network topology from HX to ACI in our lab looks like this:

We are using the following 4 VLANs for the HX required networks:

- vswitch-hx-inband-mgmt – VLAN360

- vswitch-hx-storage-data – VLAN361

- vswitch-hx-vmotion – VLAN362

- vswitch-hx-vm-network – VLAN363 (*) Later on, we will add a new VLAN range, 364-400, as a dynamic VLAN pool for DVS port-group assignments.
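Before building the access policies, it can help to sanity-check the VLAN plan. The sketch below is a hypothetical Python helper, not part of any Cisco tooling: it encodes the four static HX VLANs above plus the planned 364-400 dynamic range, and flags any static VLAN that falls inside the dynamic range, since a VLAN handed out dynamically to a port-group should not collide with one of the HX infrastructure networks.

```python
# Hypothetical VLAN plan for this lab: four static HX networks plus the
# dynamic range the VMM domain will draw from later on.
HX_STATIC_VLANS = {
    "vswitch-hx-inband-mgmt": 360,
    "vswitch-hx-storage-data": 361,
    "vswitch-hx-vmotion": 362,
    "vswitch-hx-vm-network": 363,
}
DYNAMIC_RANGE = range(364, 401)  # VLANs 364-400 inclusive


def check_vlan_plan(static: dict[str, int], dynamic: range) -> list[str]:
    """Return a list of problems found in the VLAN plan (empty if it looks sane)."""
    problems = []
    for name, vlan in static.items():
        if not 1 <= vlan <= 4094:
            problems.append(f"{name}: VLAN {vlan} is outside 1-4094")
        if vlan in dynamic:
            problems.append(f"{name}: VLAN {vlan} overlaps the dynamic pool")
    if len(set(static.values())) != len(static):
        problems.append("two static networks share the same VLAN ID")
    return problems


print(check_vlan_plan(HX_STATIC_VLANS, DYNAMIC_RANGE))  # → []
print(len(DYNAMIC_RANGE))  # → 37 VLANs available for port-groups
```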

The following figure shows the overall ACI fabric access policies:

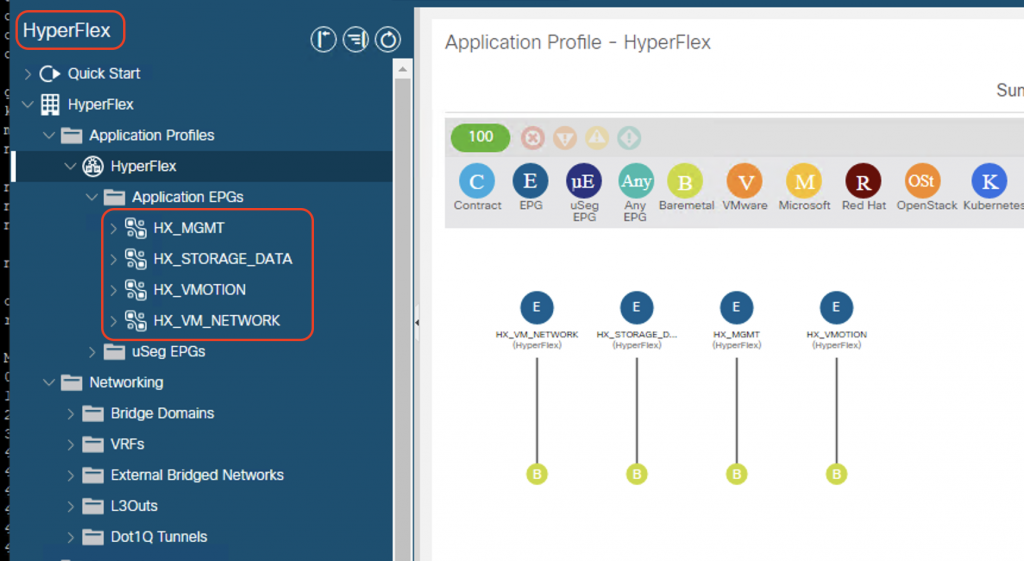

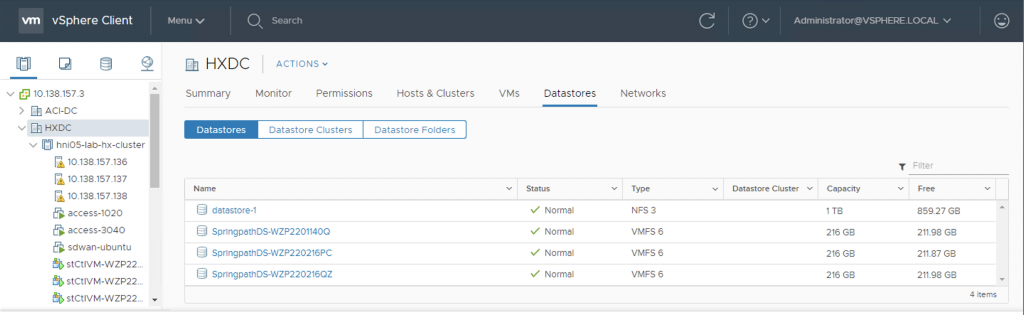

We also need to create an ACI tenant with the appropriate EPGs and static bindings. In my setup, ACI acts as a pure L2 fabric. It is up to you whether to put the default gateway of the HX nodes on ACI, depending on your requirements.

As long as you follow the installation guide, you should be able to get the HX cluster up and running without much hassle, as most of the work has been automated by the Cisco-provided installer scripts.

Integrations with FI (UCS Manager integration)

The networking policies on Cisco HX nodes (UCS) can be automated by integrating UCS Manager (UCSM) into ACI. Cisco APIC takes hypervisor NIC information from the UCSM and a virtual machine manager (VMM) to automate VLAN programming on the vNICs.

There are certain prerequisites that must be fulfilled before proceeding with the integration. As we are using HyperFlex, most of the requirements on the UCSM version and vNIC templates have already been taken care of by the HX installer. The UCSM integration is available from APIC release 4.1(1) onwards, so make sure ACI is running a supported version.
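If you want to script the version check, here is a minimal sketch that assumes APIC release strings follow the familiar `major.minor(maintenance + letter)` format, such as `4.1(1i)`. The function names are hypothetical, and how you obtain the version string (GUI, API, or CLI) is left out.

```python
import re

# Assumed format: "4.1(1i)" -> major 4, minor 1, maintenance 1, patch letter "i".
_APIC_VERSION = re.compile(r"^(\d+)\.(\d+)\((\d+)([a-z]*)\)$")


def parse_apic_version(version: str) -> tuple[int, int, int, str]:
    """Split an APIC release string into (major, minor, maintenance, patch letter)."""
    m = _APIC_VERSION.match(version.strip())
    if m is None:
        raise ValueError(f"unrecognised APIC version string: {version!r}")
    major, minor, maint, patch = m.groups()
    return int(major), int(minor), int(maint), patch


def supports_ucsm_integration(version: str) -> bool:
    """True if the release is 4.1(1) or later; the patch letter is ignored."""
    major, minor, maint, _ = parse_apic_version(version)
    return (major, minor, maint) >= (4, 1, 1)


for v in ["3.2(9h)", "4.0(3d)", "4.1(1i)", "5.2(7f)"]:
    print(v, supports_ucsm_integration(v))
```

Comparing integer tuples rather than raw strings avoids surprises such as `"10.0"` sorting before `"4.1"`.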

Installing the Cisco External Switch app

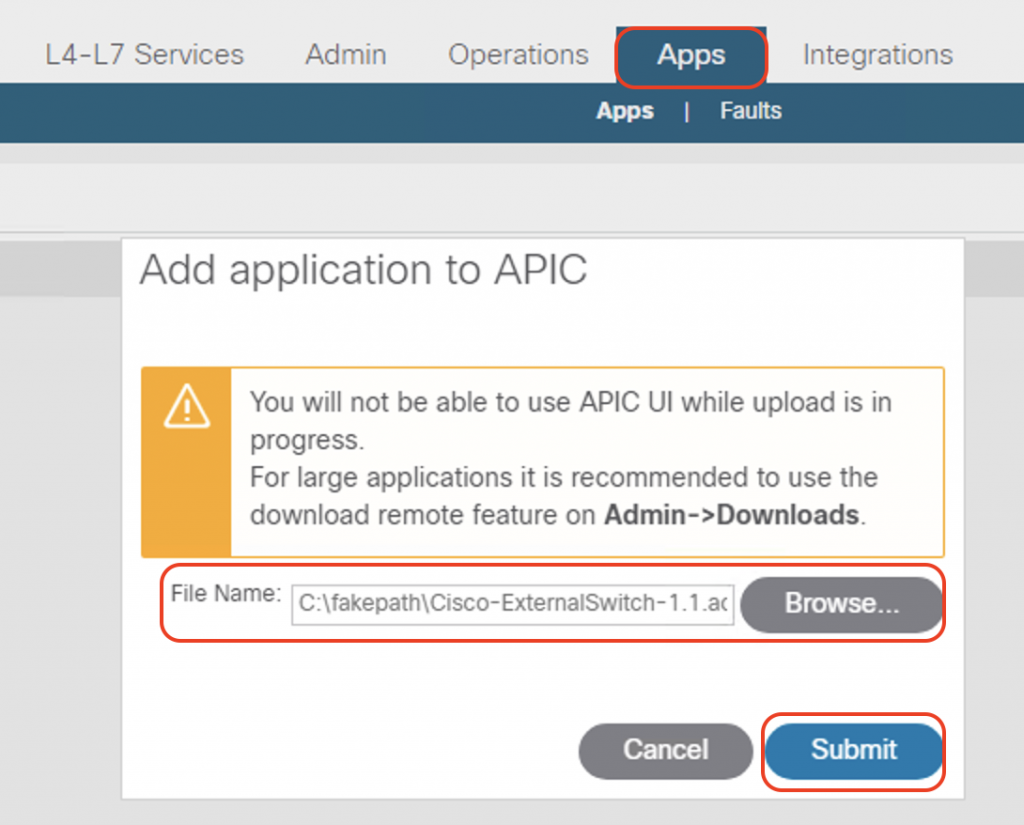

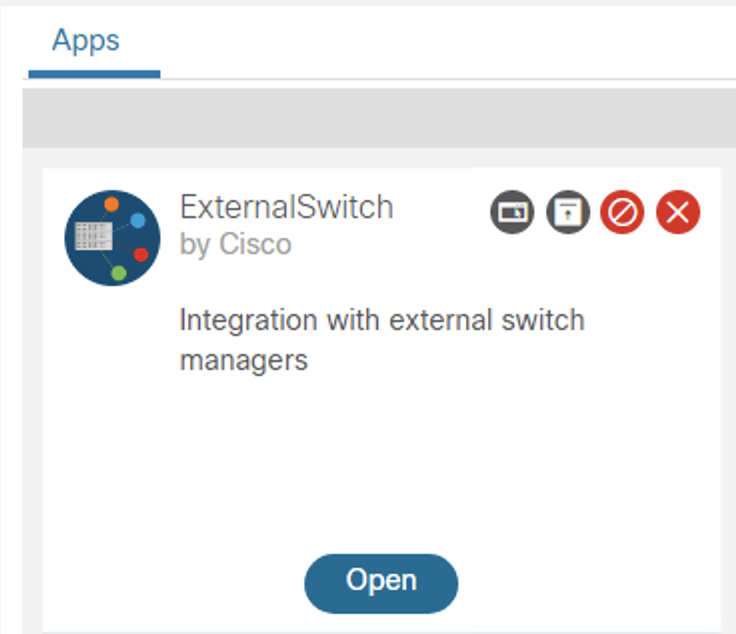

Next, we need to install the Cisco External Switch app on APIC. The app can be downloaded from https://aciappcenter.cisco.com/ and installed on APIC via Apps > Add application > browse to the app file > Submit.

Make sure the app has been installed on APIC:

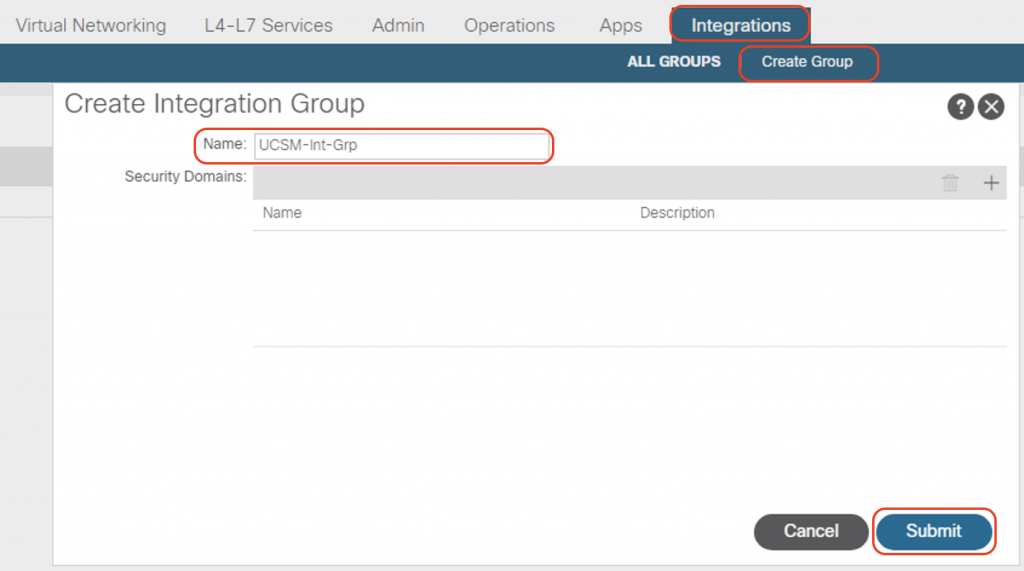

Creating an Integration Group on APIC

On APIC GUI, navigate to Integrations > Create group. In the Name field, enter the name of the integration group, then click Submit.

The integration group will be created on APIC.

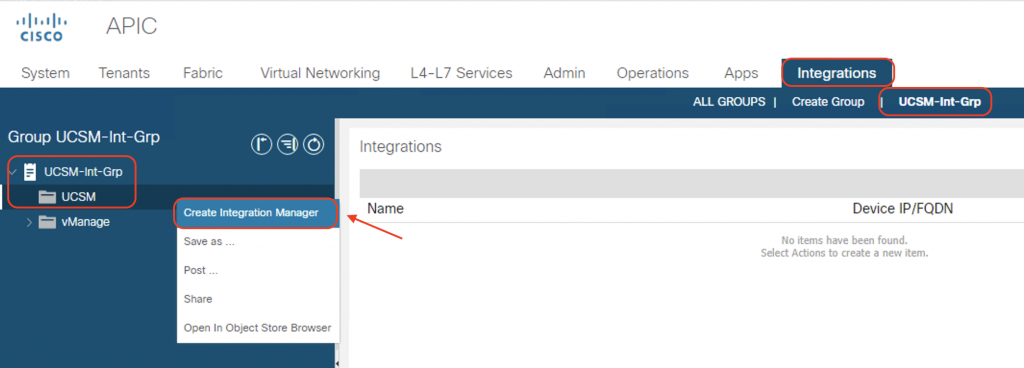

Creating an Integration for the Integration Group

Next, click on the UCSM-Int-Grp integration group we created in the previous step. In the left navigation pane, right-click the UCSM folder and then choose Create Integration Manager.

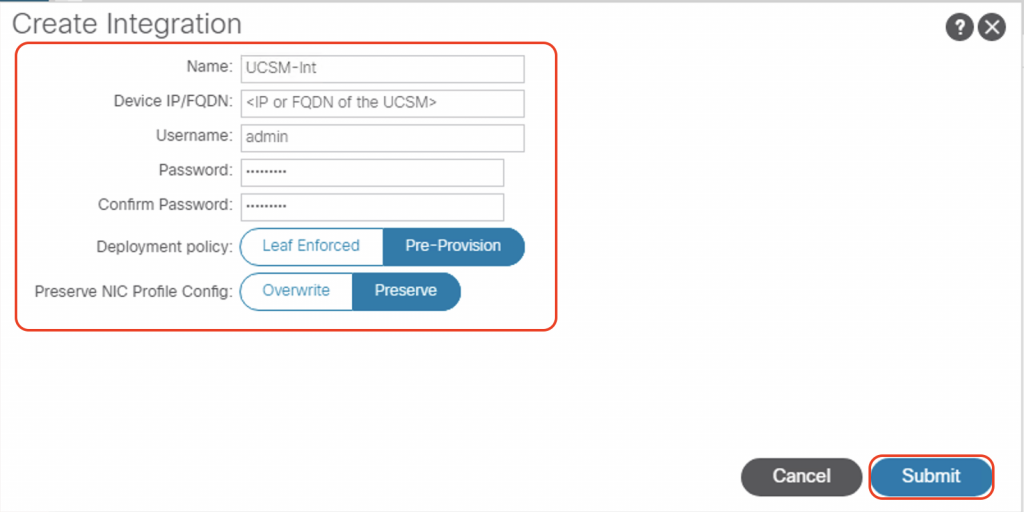

Provide the following:

- Name of the integration: UCSM-Int

- IP or FQDN of the UCS Manager

- Admin credentials of UCS Manager

- Deployment policy: Pre-provision

- Preserve NIC Profile Config: Preserve (we don’t want the existing NIC config to be overwritten by this integration)

Click Submit.

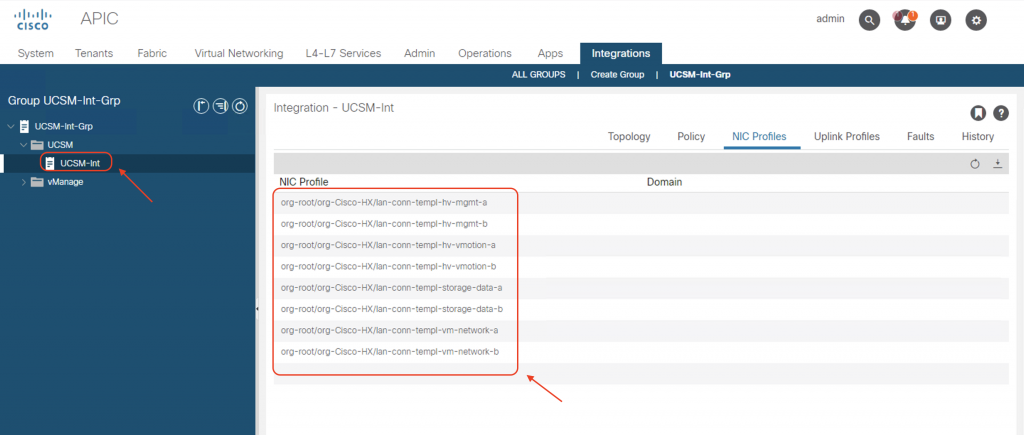

We can see the integration group has been created, and we can navigate to “NIC Profiles” and see all the profiles that have been discovered on the UCSM.

Creating a VMM VDS integration with VMware and associating the External Switch Manager

I will not go into detail on how to create a VMM domain on ACI, as the process has been well covered by many ACI materials. Basically, it is the same as integrating ACI with VMware ESXi on a UCS blade system. You can refer to this official guide from Cisco.

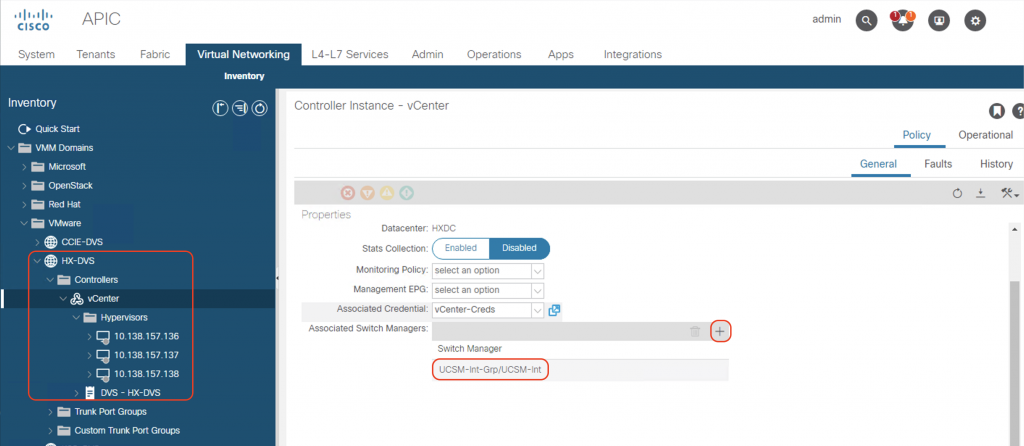

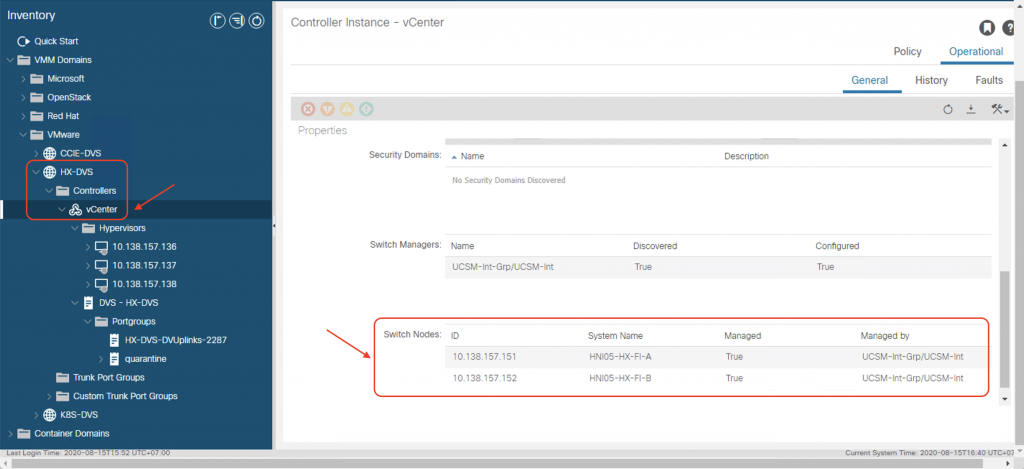

After we have created the VMM domain (HX-DVS in this example), we need to associate the previously created UCSM integration with the VMM domain. In the Associated Switch Managers field, click the plus sign (+) and choose the UCSM-Int integration we created earlier.

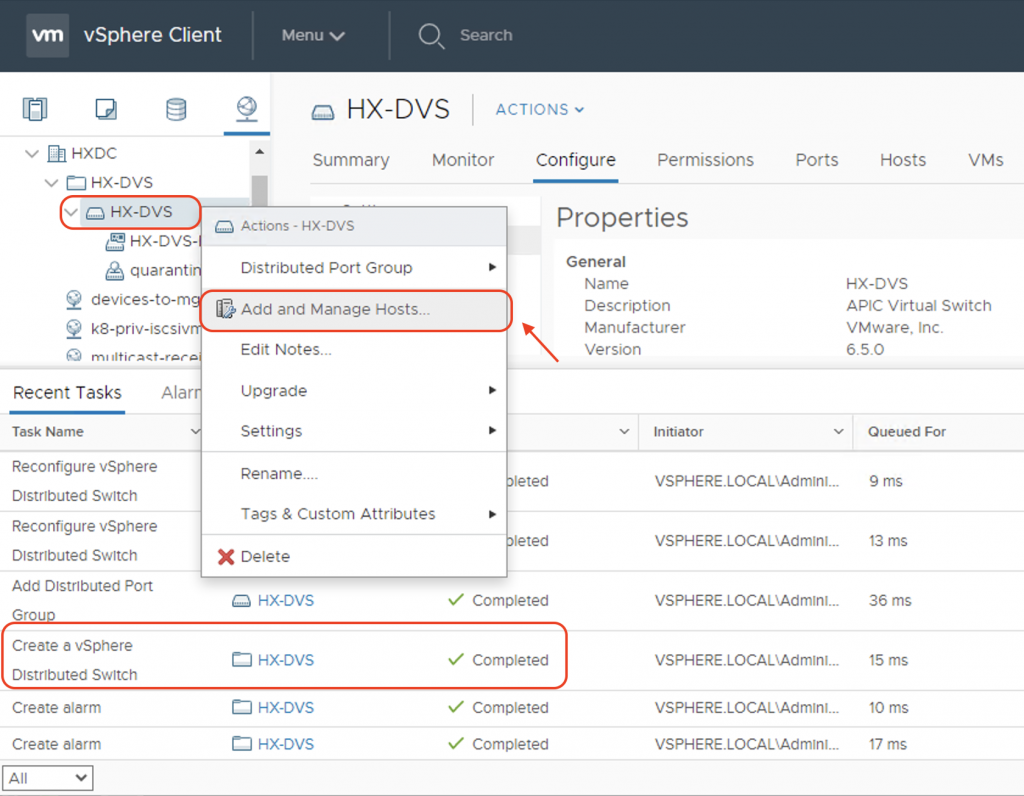

Now, let’s log in to vCenter. As with any VMware VMM DVS integration, ACI automatically creates a DVS on vCenter with the same name as the VMM domain. Right-click HX-DVS and choose “Add and Manage Hosts…”

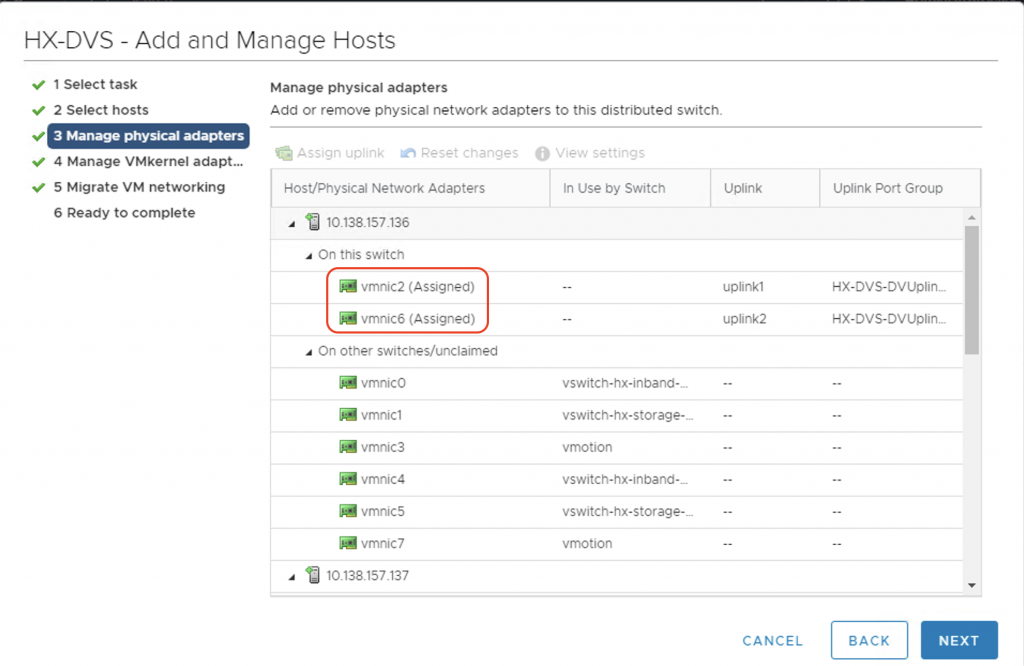

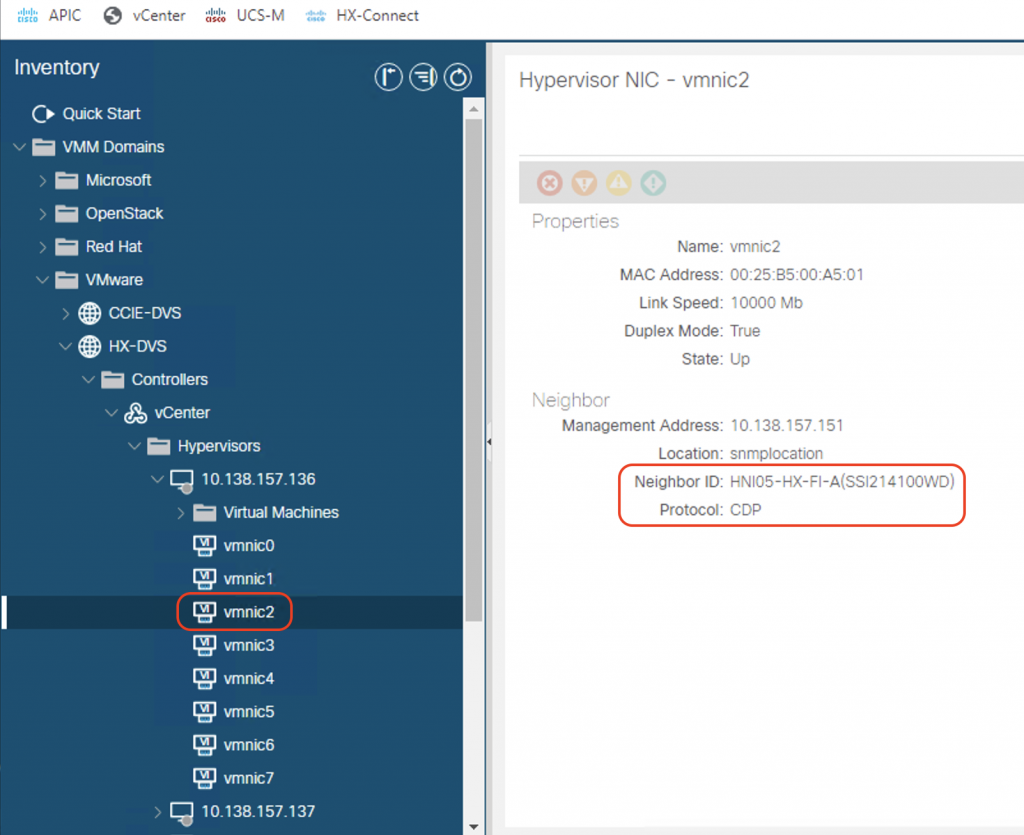

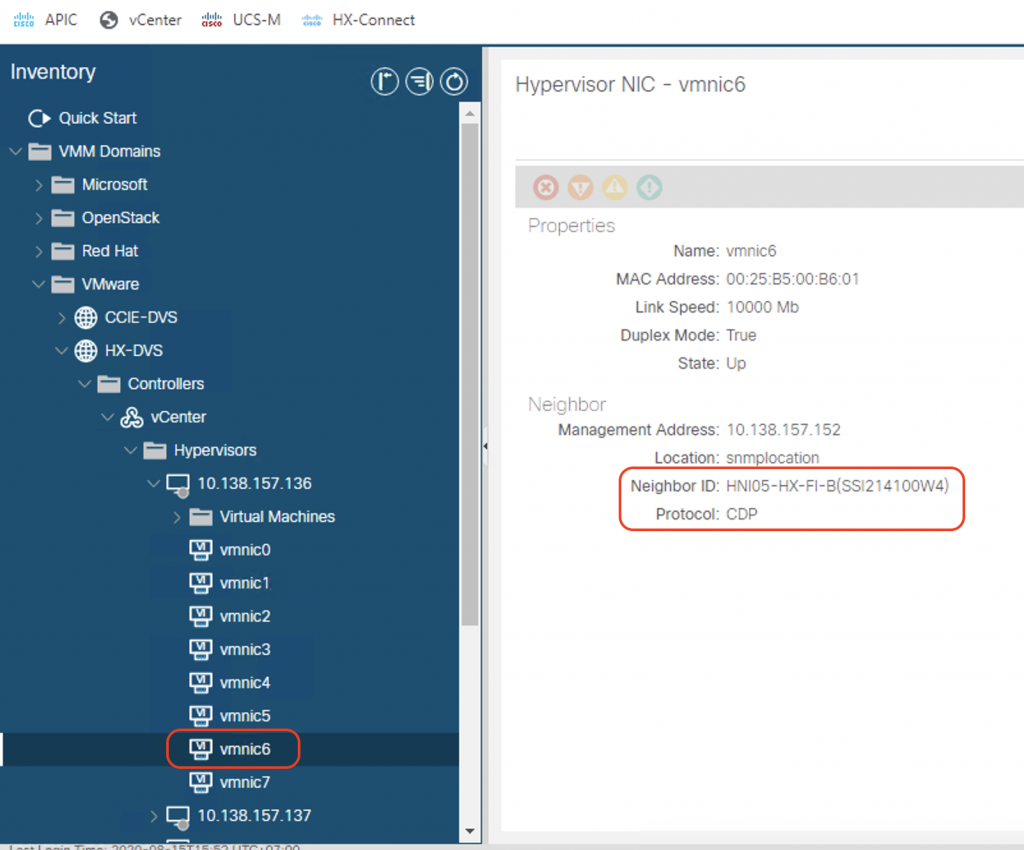

In the next step, choose all the vmnics carrying VM network traffic (vmnic2 and vmnic6 in this case) and assign them as uplinks. We need to do the same for all 3 nodes in the cluster.

If we have done everything correctly, the ESXi hosts can now discover the Fabric Interconnects on the DVS uplinks using the discovery protocol (CDP or LLDP) chosen in the VMM DVS configuration. Verify this on all of the nodes in the cluster.

With the external switch manager, we can now see the list of the FIs (FI-A and FI-B) on the ACI VMM controller page:

Verification of the integration

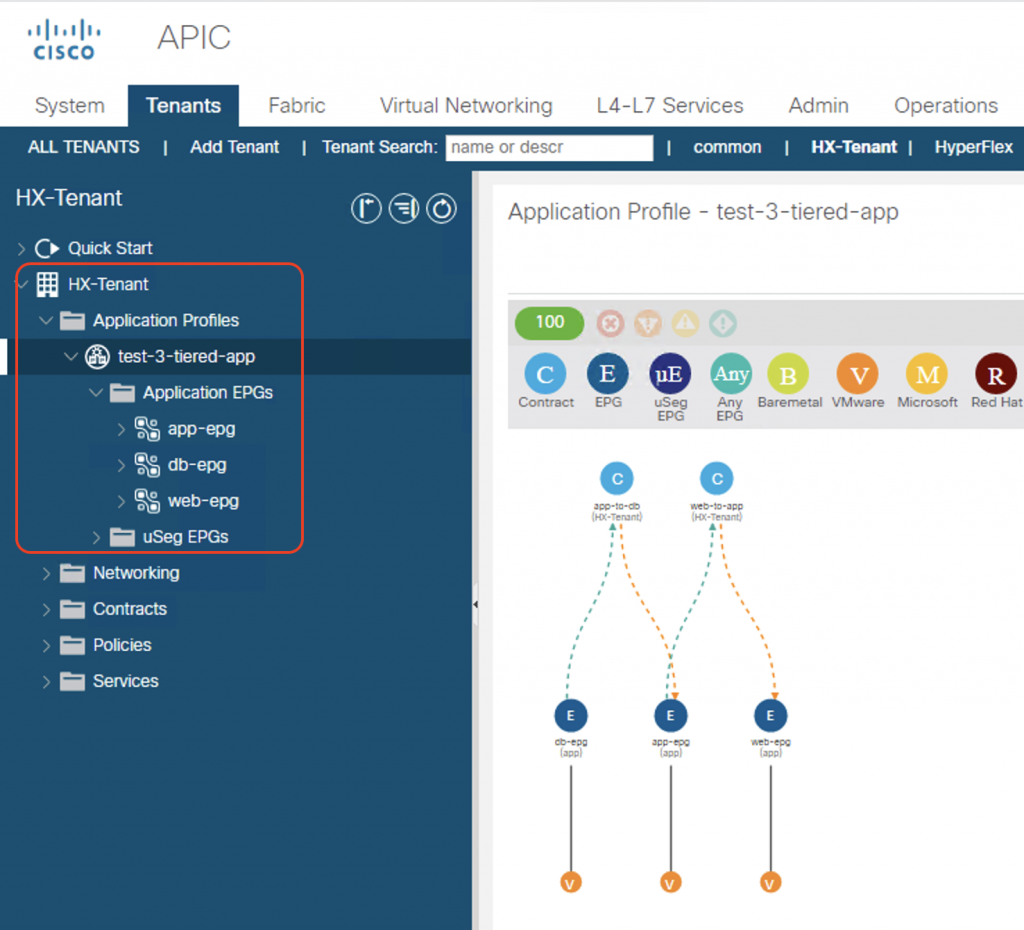

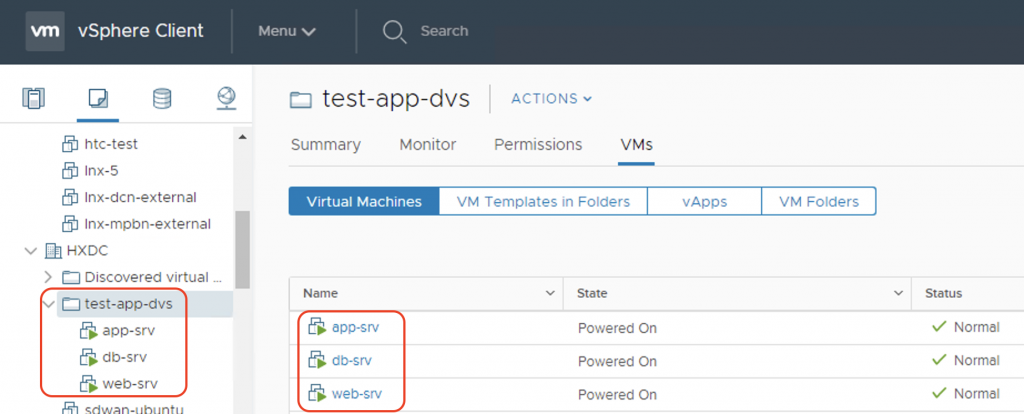

For a quick verification of the integration, let’s deploy a sample 3-tier app on a test tenant named HX-Test. I will create 3 EPGs on ACI named web-epg, app-epg, and db-epg, and associate the VMM domain with all of them. I will also deploy two contracts: app-to-db and web-to-app.
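To make the expected behaviour of this test tenant concrete, here is a rough Python model of ACI’s whitelist semantics: traffic between different EPGs is only permitted when a contract ties them together, and contracts are not transitive. It assumes web-epg consumes web-to-app (provided by app-epg) and app-epg consumes app-to-db (provided by db-epg); the post does not say which side provides each contract, and the model ignores filters, ports, and return traffic.

```python
# Hypothetical model of the HX-Test tenant: (consumer EPG, provider EPG) per contract.
CONTRACTS = {
    "web-to-app": ("web-epg", "app-epg"),
    "app-to-db": ("app-epg", "db-epg"),
}


def is_allowed(src_epg: str, dst_epg: str) -> bool:
    """Whether src_epg may initiate traffic to dst_epg under the contracts above."""
    if src_epg == dst_epg:
        return True  # intra-EPG traffic is permitted unless isolation is enforced
    # Only a direct consumer -> provider relationship opens the path.
    return any(c == src_epg and p == dst_epg for c, p in CONTRACTS.values())


print(is_allowed("web-epg", "app-epg"))  # True: covered by web-to-app
print(is_allowed("web-epg", "db-epg"))   # False: no contract between web and db
```

The second call is the interesting one when you later test with real VMs: web servers should not reach the database directly, even though both talk to app-epg.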

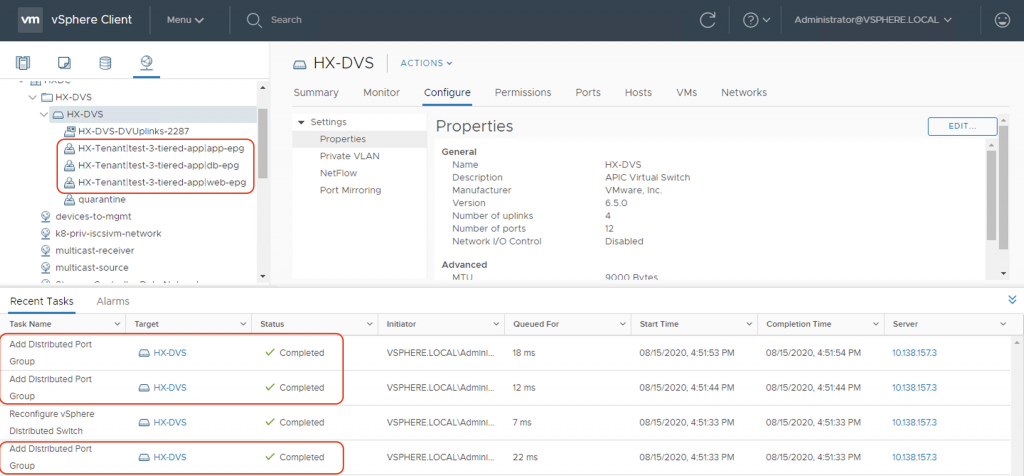

The first thing we notice when we associate the VMM domain with the EPGs is that ACI automatically creates all the corresponding port-groups on the HX-DVS distributed switch.
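The port-group names follow the tenant, application profile, and EPG pattern described in the author’s reply in the comments, joined by ACI’s default `|` delimiter. The sketch below only reproduces that naming; the application profile name `3tier-ap` is hypothetical, since the post does not name it.

```python
def port_group_name(tenant: str, app_profile: str, epg: str, delimiter: str = "|") -> str:
    """Build the DVS port-group name ACI uses for an EPG: tenant|app-profile|epg."""
    return delimiter.join((tenant, app_profile, epg))


# "3tier-ap" is a hypothetical application profile name.
for epg in ("web-epg", "app-epg", "db-epg"):
    print(port_group_name("HX-Test", "3tier-ap", epg))
```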

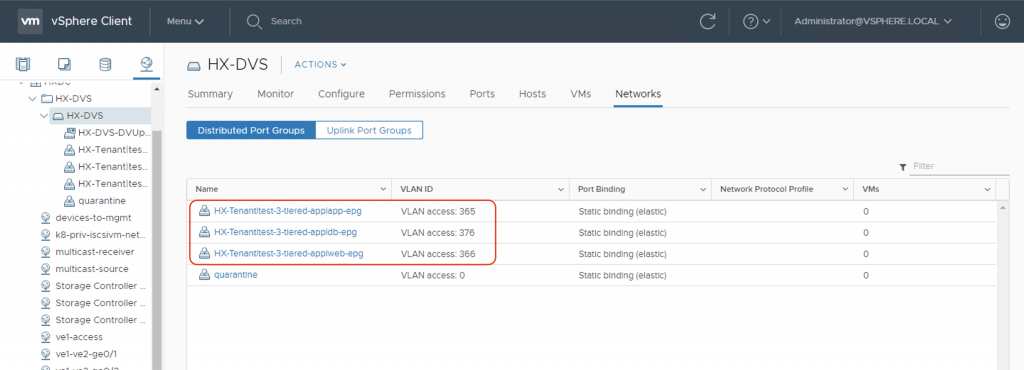

Now let’s examine the VLANs that ACI has picked from the dynamic VLAN pool associated with the VMM domain and assigned to the port-groups. As the vCenter GUI screenshot shows, the assigned VLANs in this case are vlan-365, vlan-366, and vlan-376.
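A quick way to double-check the assignment is to confirm that every encap reported in vCenter falls inside the dynamic pool. The helper below is hypothetical and assumes encaps are written in ACI’s `vlan-<id>` style, as in the screenshot; the pool boundaries are the 364-400 block mentioned earlier.

```python
def parse_encap(encap: str) -> int:
    """Turn an ACI-style encap string such as 'vlan-365' into the integer VLAN ID."""
    prefix, sep, vid = encap.partition("-")
    if prefix != "vlan" or not sep or not vid.isdigit():
        raise ValueError(f"not a VLAN encap: {encap!r}")
    return int(vid)


DYNAMIC_POOL = range(364, 401)  # the 364-400 block used by the VMM domain
observed = ["vlan-365", "vlan-366", "vlan-376"]  # values seen in vCenter

outside = [e for e in observed if parse_encap(e) not in DYNAMIC_POOL]
print(outside)  # → []
```

Note that the assigned IDs are not consecutive, so the check only asserts pool membership, not any particular allocation order.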

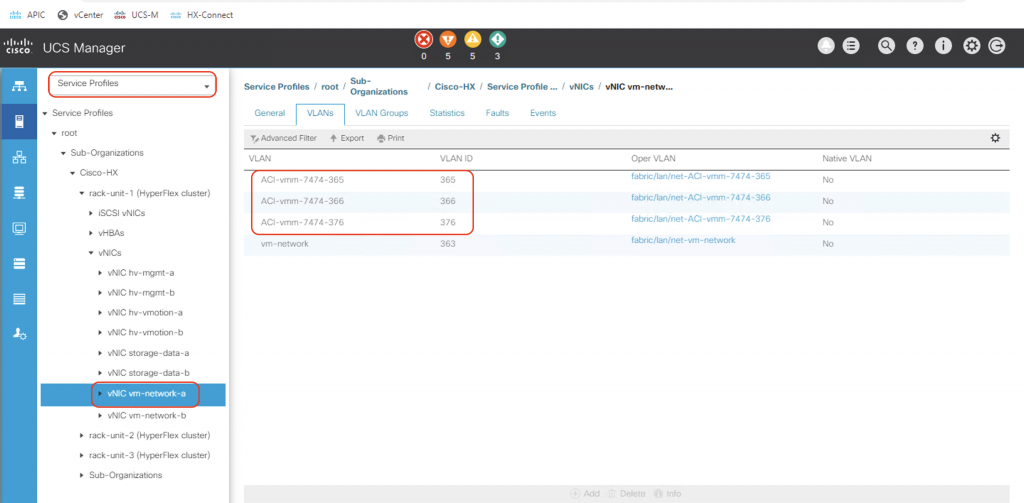

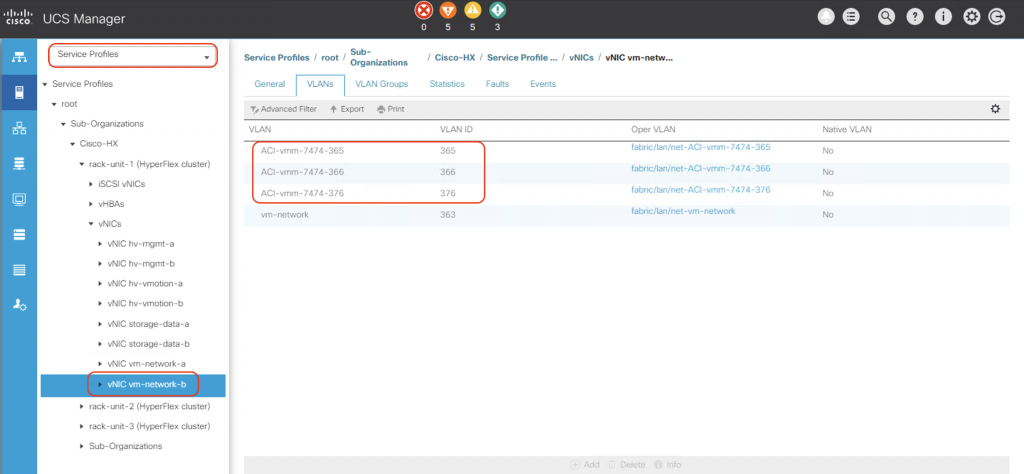

Now let’s go over to UCS Manager, log in with your admin credentials, and open the associated service profiles for the 3 HX nodes. Examine the vNICs vm-network-a and vm-network-b in each of these service profiles. You will notice that ACI has automatically created the necessary VLANs on these vNICs on all of the nodes in the HX cluster.
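The VLANs that ACI pushes to UCSM follow a fixed `ACI-vmm-[VMM-domain-id]-[VLAN-ID]` naming scheme (see the author’s reply in the comments), so they are easy to pick out when reviewing a vNIC. This hypothetical sketch builds and recognizes such names; the VLAN list and the non-ACI name `hx-vm-network` are made up, and the domain ID 7474 is borrowed from the example in the comments rather than from this lab.

```python
import re

# Pattern from the comments: ACI-vmm-<VMM domain ID>-<VLAN ID>.
_ACI_VLAN = re.compile(r"^ACI-vmm-(\d+)-(\d+)$")


def ucsm_vlan_name(vmm_domain_id: int, vlan_id: int) -> str:
    """Name of the VLAN object ACI creates on UCSM, e.g. ACI-vmm-7474-300."""
    return f"ACI-vmm-{vmm_domain_id}-{vlan_id}"


def aci_managed_vlans(vlan_names: list[str]) -> list[int]:
    """Return the sorted VLAN IDs whose names match the ACI-created pattern."""
    ids = []
    for name in vlan_names:
        m = _ACI_VLAN.match(name)
        if m:
            ids.append(int(m.group(2)))
    return sorted(ids)


# Hypothetical VLAN list on vNIC vm-network-a.
vnic_vlans = ["hx-vm-network", ucsm_vlan_name(7474, 376),
              ucsm_vlan_name(7474, 365), ucsm_vlan_name(7474, 366)]
print(aci_managed_vlans(vnic_vlans))  # → [365, 366, 376]
```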

Voila! In the future, if we want additional VLANs for the DVS port-groups, we can just add the VLAN blocks to the dynamic VLAN pool used for the HX DVS integration, and ACI will create them automatically on both UCSM and vCenter. We no longer have to touch the post-install scripts on HX to create these VLANs manually.
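If you prefer to add VLAN blocks through the APIC REST API rather than the GUI, the request could look roughly like the sketch below. It only builds the URL path and JSON body for an `fvnsEncapBlk` object under a dynamic VLAN pool; the pool name `HX-DVS-pool` and the 401-450 range are hypothetical, sending the request requires an authenticated APIC session (not shown), and you should confirm the exact payload with the APIC API Inspector for your release.

```python
import json


def encap_block_request(pool_name: str, first: int, last: int) -> tuple[str, str]:
    """Build (URL path, JSON body) that adds VLANs first..last to a dynamic VLAN pool.

    Assumes the pool already exists as uni/infra/vlanns-[<pool_name>]-dynamic.
    """
    if not 1 <= first <= last <= 4094:
        raise ValueError("VLAN range must satisfy 1 <= first <= last <= 4094")
    rn = f"from-[vlan-{first}]-to-[vlan-{last}]"
    dn = f"uni/infra/vlanns-[{pool_name}]-dynamic/{rn}"
    body = {
        "fvnsEncapBlk": {
            "attributes": {
                "dn": dn,
                "from": f"vlan-{first}",
                "to": f"vlan-{last}",
                "status": "created",
            }
        }
    }
    return f"/api/node/mo/{dn}.json", json.dumps(body)


# Hypothetical pool name and range; POST the body to this path on the APIC.
path, payload = encap_block_request("HX-DVS-pool", 401, 450)
print(path)
print(payload)
```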

The next steps of the verification would be to deploy some VMs on the HX cluster, put them in the corresponding port-groups that ACI has created, and test connectivity among them to see whether it complies with the contracts we have deployed on ACI. However, those are outside the scope of this tutorial.

Have fun with your ACI integration!

Hi there

Very fine tutorial for the HyperFlex integration.

Do you know if there is a way to control the names of the auto-generated port-groups in VMware?

If you are talking about the VMware port-group names, it’s the same as a typical VMM domain integration. The format is:

Tenant | Application profile | EPG name

For the auto-generated VLAN names on UCSM, the format is fixed as:

ACI-vmm-[VMM-domain-id]-[VLAN-ID], e.g. ACI-vmm-7474-300 for VLAN300.

Hi GP

Great article, thanks for that.

One Q:

I am running HX with 2 upstream facilities (disjoint L2): one of them is ACI, the other is a pair of C9K5s. The 3 HX-relevant VLANs (Data, Mgmt, vMotion) are simply forwarded to the C9K5 facility. The actual client-VM traffic (projects, around 100 VLANs) will be forwarded to ACI. The forwarding decision is made via VLAN Groups on the FIs (1 for ACI, 1 for C9K5).

In this case, ACI needs to add the newly created VLANs, selected from the VLAN pool, not only to the vNICs but also to the right VLAN Group. Is it possible to configure this? It’s actually only one VLAN Group (pinned to the ACI uplink).

Thanks for your reply

best

chris

Hi Chris,

Sorry, I was not able to look at my blog for quite some time. The integration only takes care of the connectivity between ACI and the FIs through the External Switch app and the discovery protocol being used, and it only covers VM tenant traffic. As long as you have separate VLAN pools for ACI-to-FI vs. C9K-to-FI, you’ll be OK. The DVS uplinks on ESX must be different, and there will not be any integration/automation between the C9Ks and the FIs; it falls back to the normal scenario.

Cheers,

Giang